diff --git a/docs/source/en/using-diffusers/other-formats.md b/docs/source/en/using-diffusers/other-formats.md

index df3df92f0693..11afbf29d3f2 100644

--- a/docs/source/en/using-diffusers/other-formats.md

+++ b/docs/source/en/using-diffusers/other-formats.md

@@ -70,41 +70,32 @@ pipeline = StableDiffusionPipeline.from_single_file(

-#### LoRA files

+#### LoRAs

-[LoRA](https://hf.co/docs/peft/conceptual_guides/adapter#low-rank-adaptation-lora) is a lightweight adapter that is fast and easy to train, making them especially popular for generating images in a certain way or style. These adapters are commonly stored in a safetensors file, and are widely popular on model sharing platforms like [civitai](https://civitai.com/).

+[LoRAs](../tutorials/using_peft_for_inference) are lightweight checkpoints fine-tuned to generate images or video in a specific style. If you are using a checkpoint trained with a Diffusers training script, the LoRA configuration is automatically saved as metadata in a safetensors file. When the safetensors file is loaded, the metadata is parsed to configure the LoRA correctly, which avoids missing or incorrect LoRA configurations.

-LoRAs are loaded into a base model with the [`~loaders.StableDiffusionLoraLoaderMixin.load_lora_weights`] method.

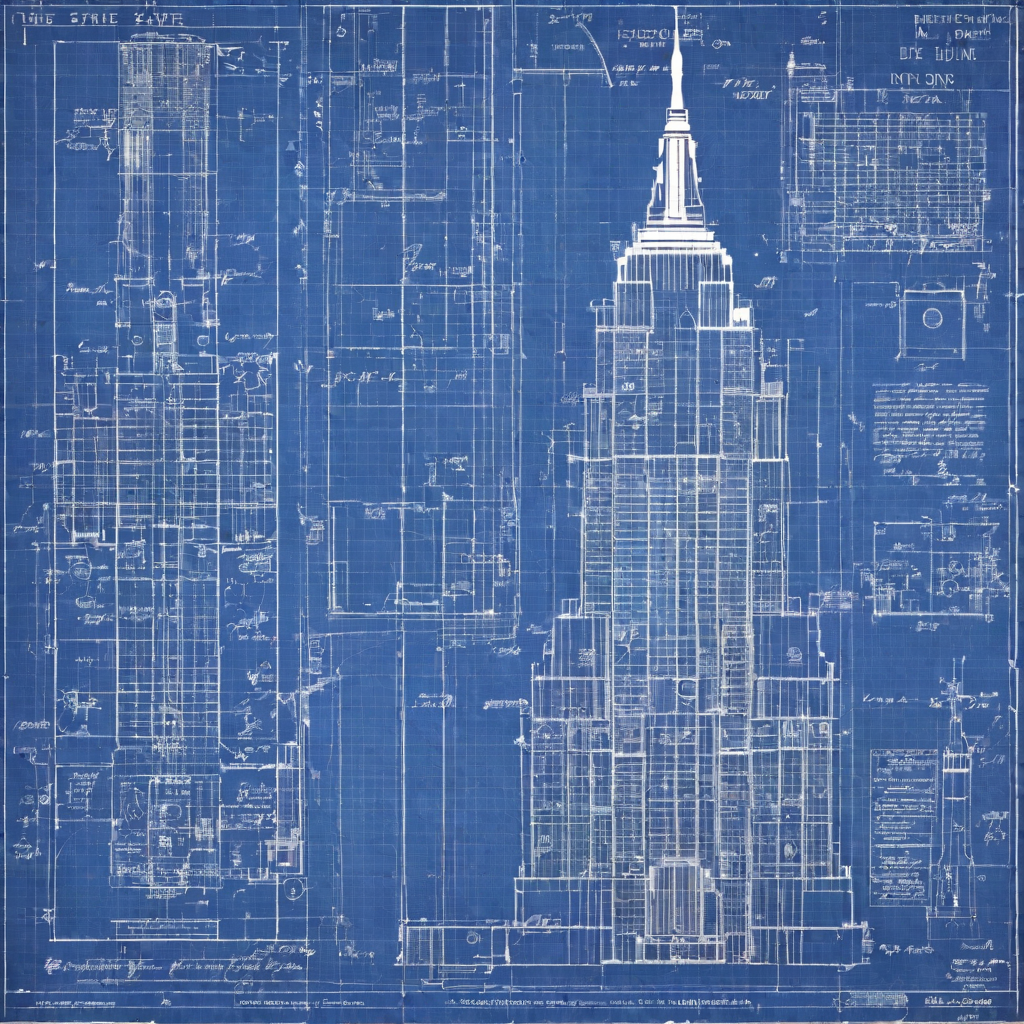

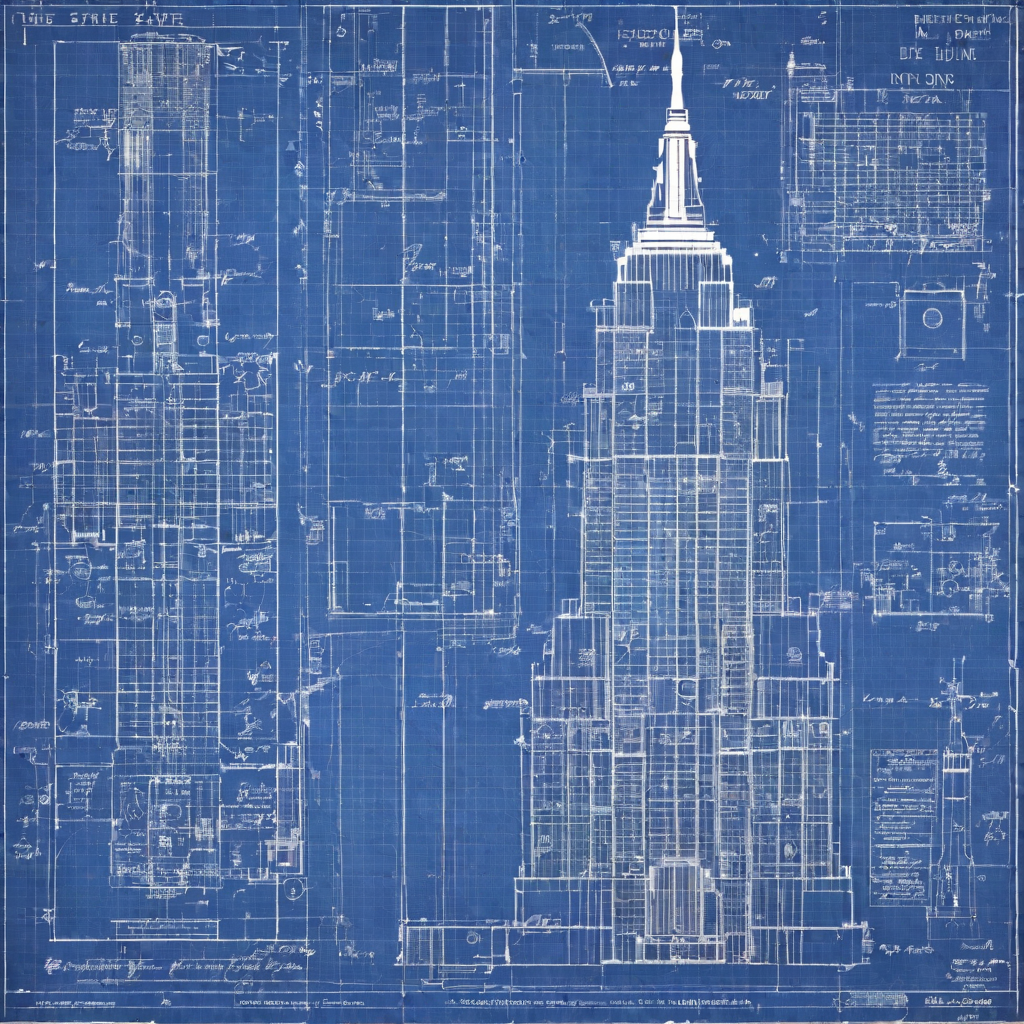

+The easiest way to inspect the metadata, if available, is by clicking on the Safetensors logo next to the weights.
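
The metadata can also be read locally without any extra dependency. The sketch below is illustrative and not part of this change: it assumes the standard safetensors layout (an 8-byte little-endian header length, followed by a JSON header whose optional `__metadata__` entry holds string key/value pairs). The function name `read_safetensors_metadata` and the file name in the usage comment are hypothetical.

```python
import json
import struct


def read_safetensors_metadata(path):
    """Return the `__metadata__` dict from a safetensors header, or {} if absent."""
    with open(path, "rb") as f:
        # The first 8 bytes store the header size as a little-endian unsigned 64-bit integer.
        (header_len,) = struct.unpack("<Q", f.read(8))
        # The header is UTF-8 JSON: tensor descriptions plus an optional metadata entry.
        header = json.loads(f.read(header_len).decode("utf-8"))
    return header.get("__metadata__", {})


# Usage (hypothetical file name):
# for key, value in read_safetensors_metadata("pytorch_lora_weights.safetensors").items():
#     print(key, value)
```

Only the header is read, so this stays fast even for large weight files. If the `safetensors` package is installed, `safe_open(...).metadata()` should return the same information.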
