- **Offline**: NGINX Agent is connected but the NGINX instance isn't running, isn't installed, or can't communicate with NGINX Agent.

- **Unavailable**: The connection between NGINX Agent and NGINX One has been lost or the instance has been decommissioned.

- **Unknown**: The current state can't be determined at the moment. |

+| **NGINX versions by instance** | See which NGINX versions are in use across your instances. | |

+| **Operating systems** | Find out which operating systems your instances are running on. | |

+| **Certificates** | Monitor the status of your SSL certificates to know which are expiring soon and which are still valid. | |

+| **Config recommendations** | Get configuration recommendations to optimize your instances' settings. | |

+| **CVEs (Common Vulnerabilities and Exposures)** | Evaluate the severity and number of potential security threats in your instances. | - **Major**: Indicates a high-severity threat that needs immediate attention.

- **Medium**: Implies a moderate threat level.

- **Minor** and **Low**: Represent less critical issues that still require monitoring.

- **Other**: Encompasses any threats that don't fit the standard categories. |

+| **CPU utilization** | Track CPU usage trends and pinpoint instances with high CPU demand. | |

+| **Memory utilization** | Watch memory usage patterns to identify instances using significant memory. | |

+| **Disk space utilization** | Monitor how much disk space your instances are using and identify those nearing capacity. | |

+| **Unsuccessful response codes** | Look for instances with a high number of HTTP server errors and investigate their error codes. | |

+| **Top network usage** | Review the network usage and bandwidth consumption of your instances. | |

+

+{{

/etc/nginx/ssl/example.com.key | Typically purchased from a Certificate Authority (CA) |

+| Repository access | /etc/ssl/nginx/nginx-repo.crt

/etc/ssl/nginx/nginx-repo.key | Supports access to repositories to download and install NGINX packages |

+| NGINX Licensing | /etc/ssl/nginx/server.crt

/etc/ssl/nginx/server.key | Supports access to repositories. Based on licenses downloaded from https://my.f5.com/ |

+{{

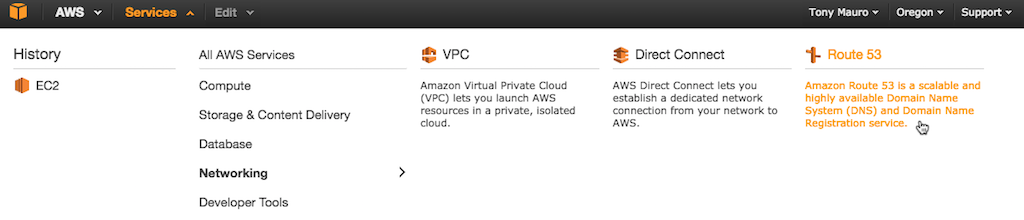

-Route 53 is a Domain Name System (DNS) service that performs global server load balancing by routing each request to the AWS region closest to the requester's location. This guide uses two regions: **US West (Oregon)** and **US East (N. Virginia)**.

+Route 53 is a Domain Name System (DNS) service that performs global server load balancing by routing each request to the AWS region closest to the requester's location. This guide uses two regions: {{

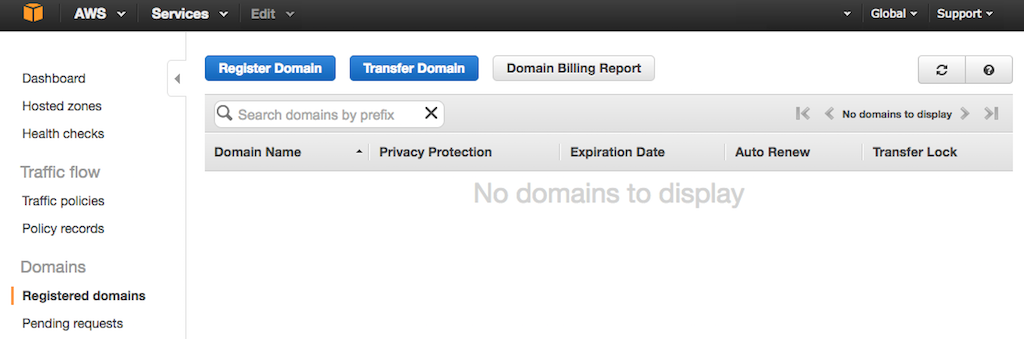
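The routing decision described in the Route 53 paragraph above can be pictured with a minimal sketch: pick the healthy region with the lowest latency for a given requester. The region codes `us-west-2` (Oregon) and `us-east-1` (N. Virginia) match the two regions in this guide; the `pick_region` helper and the latency figures are hypothetical, and Route 53 makes this decision itself rather than running code like this.

```python
from typing import Dict, Optional, Set


def pick_region(latency_ms: Dict[str, float], healthy: Set[str]) -> Optional[str]:
    """Return the healthy region with the lowest latency, or None if none is healthy."""
    # Keep only regions that are currently passing their health checks.
    candidates = {region: ms for region, ms in latency_ms.items() if region in healthy}
    if not candidates:
        return None
    # The "closest" region is modeled here as the one with the smallest latency.
    return min(candidates, key=candidates.get)


# Hypothetical latencies as seen by one requester (assumption, not measured data).
latencies = {"us-west-2": 28.0, "us-east-1": 81.0}
print(pick_region(latencies, {"us-west-2", "us-east-1"}))
print(pick_region(latencies, {"us-east-1"}))
```

With both regions healthy the requester is sent to the lower-latency one; if that region fails its health checks, the other region is chosen instead.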

@@ -87,7 +87,7 @@ Create a _hosted zone_, which basically involves designating a domain name to be

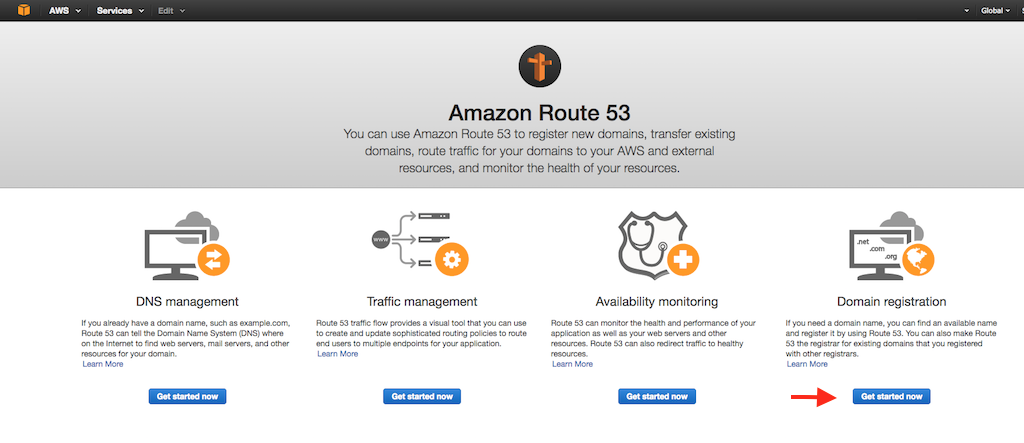

- If you see the Route 53 home page instead, access the **Registered domains** tab by clicking the Get started now button under **Domain registration**.

+ If you see the Route 53 home page instead, access the **Registered domains** tab by clicking the Get started now button under {{

@@ -124,19 +124,19 @@ Create records sets for your domain:

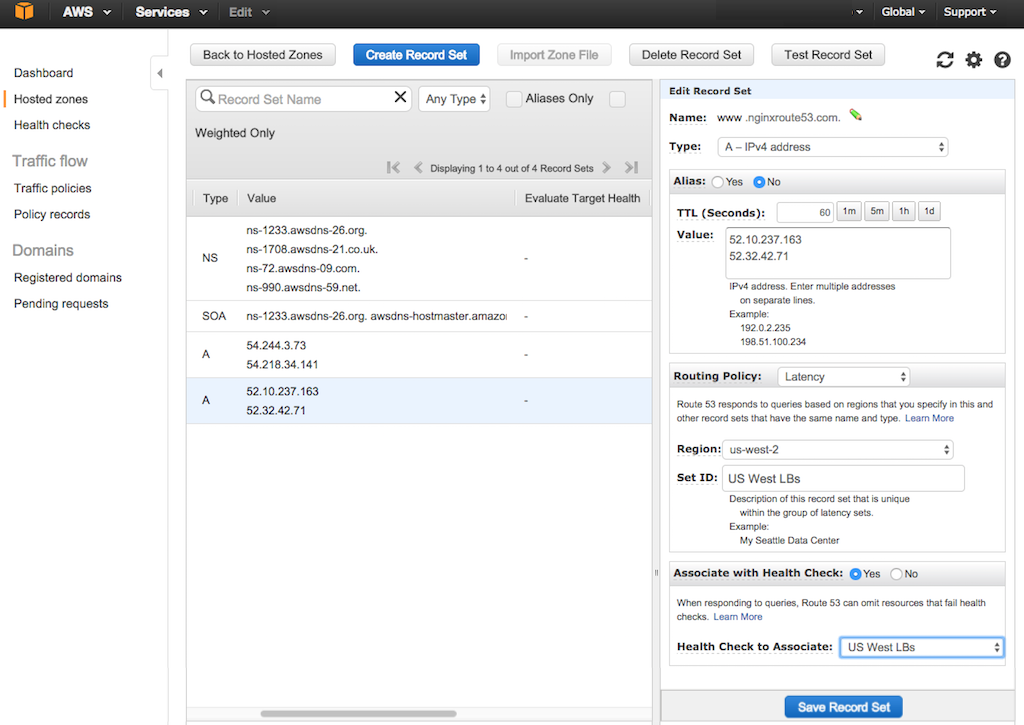

4. Fill in the fields in the **Create Record Set** column:

- **Name** – You can leave this field blank, but for this guide we are setting the name to **www.nginxroute53.com**.

- - **Type** – **A – IPv4 address**.

+ - **Type** – **A {{

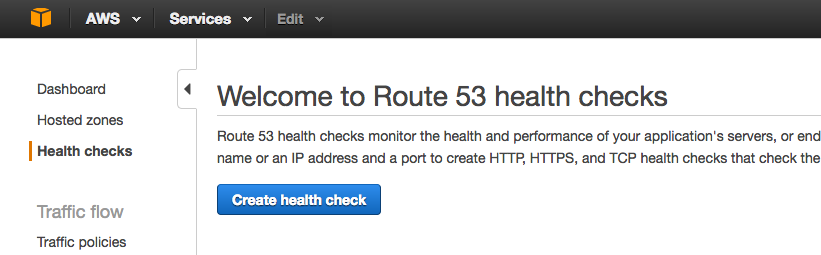

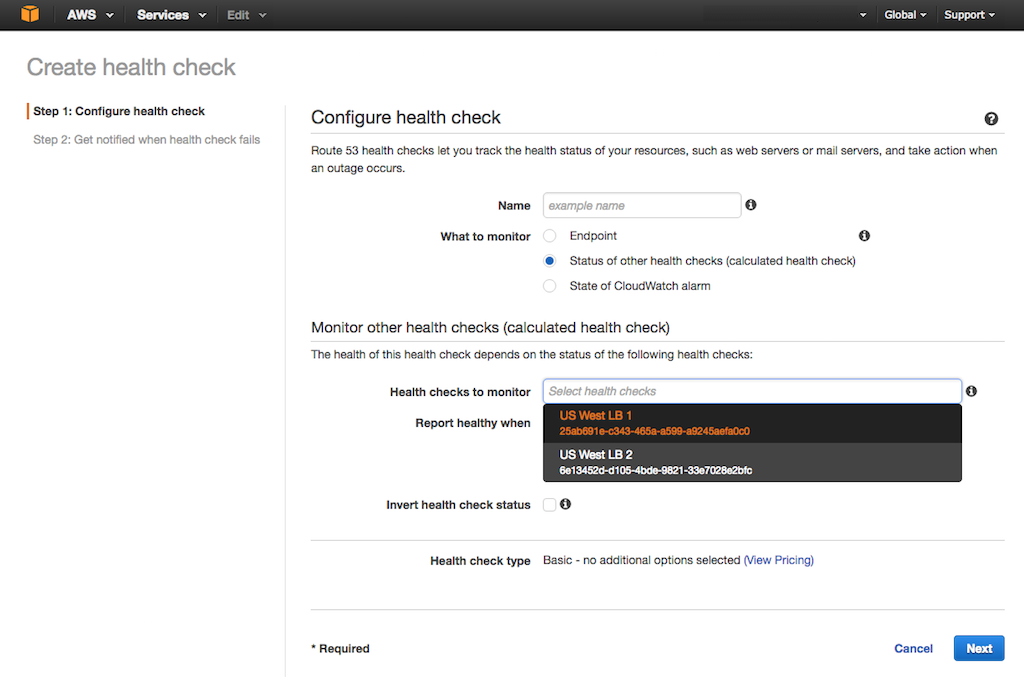
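For readers who script this step instead of using the console, the record set fields above map roughly onto the `ChangeBatch` argument that the Route 53 API (for example boto3's `change_resource_record_sets`) accepts. The sketch below only builds that dictionary and sends nothing to AWS. The helper name, the IP address `203.0.113.10` (a documentation-only address), the TTL, and the set identifier are assumptions for illustration.

```python
from typing import Any, Dict, Optional


def latency_a_record(
    name: str,
    ip: str,
    region: str,
    set_id: str,
    health_check_id: Optional[str] = None,
    ttl: int = 60,
) -> Dict[str, Any]:
    """Build a ChangeBatch dict for one latency-routed A record."""
    record: Dict[str, Any] = {
        "Name": name,
        "Type": "A",                  # A record: maps the name to an IPv4 address
        "SetIdentifier": set_id,      # distinguishes records that share a name and type
        "Region": region,             # enables latency-based routing for this record
        "TTL": ttl,
        "ResourceRecords": [{"Value": ip}],
    }
    if health_check_id:
        # Optional: tie the record to a previously created health check.
        record["HealthCheckId"] = health_check_id
    return {"Changes": [{"Action": "UPSERT", "ResourceRecordSet": record}]}


# Hypothetical values; only the record name comes from this guide.
batch = latency_a_record("www.nginxroute53.com", "203.0.113.10", "us-west-2", "US West LB 1")
print(batch["Changes"][0]["ResourceRecordSet"]["Type"])
```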

-2. Click the Create health check button. In the **Configure health check** form that opens, specify the following values, then click the Next button.

+2. Click the Create health check button. In the {{

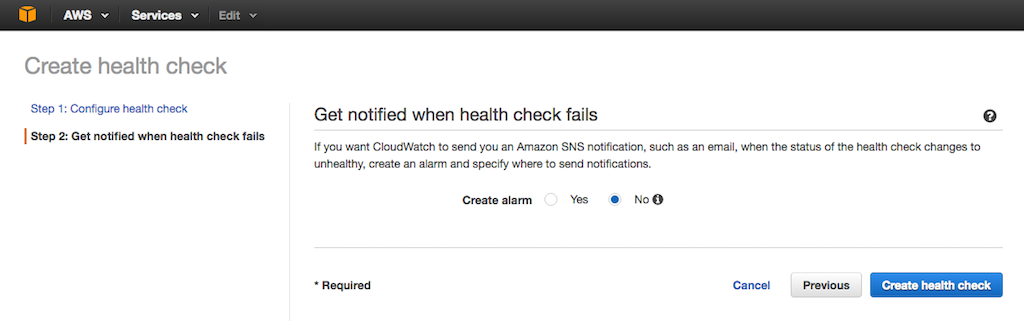
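As a rough illustration of what an HTTP health check does, the sketch below requests a URL and reports whether the endpoint answered successfully within a short timeout. It assumes a 2xx or 3xx response counts as healthy and uses a 2-second timeout; both are assumptions for this example, not the values from the form above. The `is_healthy` name is hypothetical and the code uses only the Python standard library.

```python
import urllib.error
import urllib.request


def is_healthy(url: str, timeout: float = 2.0) -> bool:
    """Return True if the URL answers with a 2xx or 3xx status within the timeout."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return 200 <= resp.status < 400
    except (urllib.error.URLError, OSError):
        # Covers refused connections, timeouts, and 4xx/5xx responses
        # (urllib raises HTTPError, a URLError subclass, for those).
        return False


# Example call (the address is a placeholder, not one from this guide):
# is_healthy("http://203.0.113.10/")
```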

-3. On the **Get notified when health check fails** screen that opens, set the **Create alarm** radio button to **Yes** or **No** as appropriate, then click the Create health check button.

+3. On the {{

-4. Repeat Steps 2 and 3 for your other NGINX Plus load balancers (in this guide, **US West LB 2**, **US East LB 1**, and **US East LB 2**).

+4. Repeat Steps 2 and 3 for your other NGINX Plus load balancers (in this guide, {{

-3. On the **Get notified when health check fails** screen that opens, set the **Create alarm** radio button as appropriate (see Step 5 in the previous section), then click the Create health check button.

+3. On the {{

4. Change the **Associate with Health Check** radio button to **Yes**.

-5. In the **Health Check to Associate** field, select the paired health check for your first region (in this guide, **US West LBs**).

+5. In the **Health Check to Associate** field, select the paired health check for your first region (in this guide, {{

@@ -366,7 +366,7 @@ After you complete the instructions on all instances, the list for a region (her

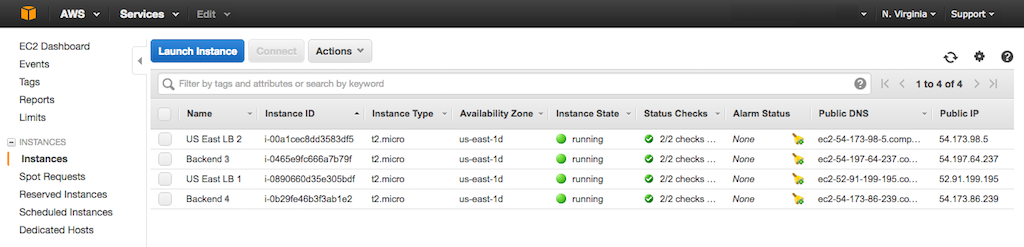

### Configuring NGINX Open Source on the Backend Servers

-Perform these steps on all four backend servers: **Backend 1**, **Backend 2**, **Backend 3**, and **Backend 4**. In Step 3, substitute the appropriate name for `Backend X` in the **index.html** file.

+Perform these steps on all four backend servers: {{

-3. The **Shed Load** filter is added as the fourth (lowest) box in the **Active Filters** section. Move it to be third by clicking and dragging it above the **Select First N** box.

+3. The **Shed Load** filter is added as the fourth (lowest) box in the **Active Filters** section. Move it to be third by clicking and dragging it above the {{

- - If there are existing projects, the name of one of them appears in the upper left of the blue header bar (in the screenshot, it's My Test Project ). Click the project name and select **Create project** from the menu that opens.

+ - If there are existing projects, the name of one of them appears in the upper left of the blue header bar (in the screenshot, it's My Test Project ). Click the project name and select {{

-3. Type your project name in the **New Project** window that pops up, then click CREATE. We're naming the project **NGINX Plus All‑Active‑LB**.

+3. Type your project name in the {{

@@ -87,24 +87,24 @@ Create a new GCE project to host the all‑active NGINX Plus deployment.

Create firewall rules that allow access to the HTTP and HTTPS ports on your GCE instances. You'll attach the rules to all the instances you create for the deployment.
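For readers who prefer to script the rule rather than use the console, the sketch below builds a request body shaped like a Compute Engine `firewalls` resource that allows HTTP and HTTPS. It only constructs the dictionary and makes no API call. The field names follow that resource as commonly documented; the helper name, the rule name, the target tag, the `default` network, and the open `0.0.0.0/0` source range are all assumptions for illustration.

```python
from typing import Any, Dict, List


def firewall_rule(name: str, ports: List[int], target_tag: str) -> Dict[str, Any]:
    """Build an ingress firewall rule body that opens the given TCP ports."""
    return {
        "name": name,
        "network": "global/networks/default",   # assumed: the project's default network
        "direction": "INGRESS",
        # Ports are expressed as strings in the firewall resource.
        "allowed": [{"IPProtocol": "tcp", "ports": [str(p) for p in ports]}],
        "sourceRanges": ["0.0.0.0/0"],           # assumed: traffic allowed from any address
        "targetTags": [target_tag],              # instances carrying this tag get the rule
    }


# Hypothetical rule and tag names.
rule = firewall_rule("allow-http-https", [80, 443], "nginx-plus-http-fw-rule")
print(rule["allowed"])
```

Using a target tag keeps the rule reusable: any instance created later with the same tag picks up the rule without further changes.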

-1. Navigate to the **Networking > Firewall rules** tab and click + CREATE FIREWALL RULE. (The screenshot shows the default rules provided by GCE.)

+1. Navigate to the {{

-2. Fill in the fields on the **Create a firewall rule** screen that opens:

+2. Fill in the fields on the {{

-3. Click the Create button. The new rule is added to the table on the **Firewall rules** tab.

+3. Click the Create button. The new rule is added to the table on the {{

- - **Identity and API access** – Keep the defaults for the **Service account ** field and **Access scopes** radio button. Unless you need more granular control.

+ - {{

@@ -166,38 +166,38 @@ Create three source VM instances based on a GCE VM image. We're basing our insta

-5. On the **Networking** subtab, verify the default settings, in particular **Ephemeral** for **External IP** and **Off** for **IP Forwarding**.

+5. On the **Networking** subtab, verify the default settings, in particular **Ephemeral** for {{

-6. If you're using your own SSH public key instead of your default GCE keys, paste the hexadecimal key string on the **SSH Keys** subtab. Right into the box that reads **Enter entire key data**.

+6. If you're using your own SSH public key instead of your default GCE keys, paste the hexadecimal key string on the {{

-7. Click the Create button at the bottom of the **Create an instance** page.

+7. Click the Create button at the bottom of the {{

#### Creating the Second Application Instance from a VM Image

-1. On the **VM instances** summary page, click CREATE INSTANCE.

+1. On the {{

@@ -253,7 +253,7 @@ Now install NGINX Plus and download files that are specific to the all‑active

Both the configuration and content files are available at the [NGINX GitHub repository](https://github.com/nginxinc/NGINX-Demos/tree/master/gce-nginx-plus-deployment-guide-files).

-Repeat these instructions for all three source instances (**nginx‑plus‑app‑1**, **nginx‑plus‑app‑2**, and **nginx‑plus‑lb**).

+Repeat these instructions for all three source instances ({{

@@ -392,25 +392,25 @@ Create three source instances based on a prebuilt NGINX Plus image running on <

-7. Navigate to the **Compute Engine > VM instances** tab and click **nginx‑plus‑app‑1‑vm** in the Name column in the table. (The **‑vm** suffix is added automatically to the name of the newly created instance.)

+7. Navigate to the {{

-8. On the **VM instances** page that opens, click EDIT at the top of the page. In fields that can be edited, the value changes from static text to text boxes, drop‑down menus, and checkboxes.

+8. On the {{

-2. Click **nginx‑plus‑app‑1‑vm** in the Name column of the table (shown in the screenshot in Step 7 of Creating the First Application Instance).

+2. Click {{

- - On the **VM instances** page that opens, click EDIT at the top of the page. In fields that can be edited, the value changes from static text to text boxes, drop‑down menus, and checkboxes.

- - In the **Boot disk and local disks** field, uncheck the checkbox labeled **Delete boot disk when instance is deleted**.

+ - On the {{

- - Select **NGINX Plus All‑Active‑LB** from the drop-down menu labeled **Show images from**.

+ - Select **NGINX {{

@@ -728,11 +728,11 @@ Create _instance templates_. They are the compute workloads in instance groups.

-8. On the **Networking** subtab, verify the default settings of **Ephemeral** for **External IP** and **Off** for **IP Forwarding**.

+8. On the **Networking** subtab, verify the default settings of **Ephemeral** for {{

-9. If you're using your own SSH public key instead of your default keys, paste the hexadecimal key string on the **SSH Keys** subtab. Right into the box that reads **Enter entire key data**.

+9. If you're using your own SSH public key instead of your default keys, paste the hexadecimal key string on the {{

@@ -741,25 +741,25 @@ Create _instance templates_. They are the compute workloads in instance groups.

### Creating the Second Application Instance Template

-1. On the **Instance templates** summary page, click CREATE INSTANCE TEMPLATE.

+1. On the {{

-6. Navigate to the **Networking > Load balancing** tab.

+6. Navigate to the {{

-13. Select **Frontend configuration** in the left column. This opens up the **Frontend configuration** interface on the right column.

+13. Select {{

-4. On the **lb | Backend Pools** page that opens, click **+ Add** in the upper left corner of the main pane.

+4. On the {{

After a few moments the virtual machines appear in the new backend pool.

-6. Click **Health probes** in the left navigation column, and then **+ Add** in the upper left corner of the main pane on the **lb | Health probes** page that opens.

+6. Click **Health probes** in the left navigation column, and then **+ Add** in the upper left corner of the main pane on the {{

- After a few moments the new probe appears in the table on the **lb | Health probes** page. This probe queries the NGINX Plus landing page every five seconds to check whether NGINX Plus is running.

+ After a few moments the new probe appears in the table on the {{

- After a few moments the new rule appears in the table on the **lb | Load balancing rules** page.

+ After a few moments the new rule appears in the table on the {{

@@ -210,7 +210,7 @@ Once you’ve tested that the Standard Load Balancer has been correctly deployed

In this case, you need to set up Azure Traffic Manager for DNS‑based global server load balancing (GSLB) among the regions. The involves creating a DNS name for the Standard Load Balancer and registering it as an endpoint in Traffic Manager.

-1. Navigate to the **Public IP addresses** page. (One way is to enter **Public IP addresses** in the search field of the Azure title bar and select that value in the **Services** section of the resulting drop‑down menu.)

+1. Navigate to the {{

-5. Navigate to the **Traffic Manager profiles** tab. (One way is to enter **Traffic Manager profiles** in the search field of the Azure title bar and select that value in the **Services** section of the resulting drop‑down menu.)

+5. Navigate to the {{

-8. It takes a few moments to create the profile. When it appears in the table on the **Traffic Manager profiles** page, click its name in the **Name** column.

+8. It takes a few moments to create the profile. When it appears in the table on the {{

@@ -75,7 +75,7 @@ In addition, to install NGINX software by following the linked instructions, you

For simplicity, we recommend allocating **Standard** public IP addresses for all six VMs used in the deployment. At the time of initial publication of this guide, the hourly cost for six such VMs was only $0.008 more than for six VMs with Basic addresses; for current pricing, see the [Microsoft documentation](https://azure.microsoft.com/en-us/pricing/details/ip-addresses/).

- To allocate a **Standard** public IP address, open the **Networking** tab on the **Create a virtual machine** window. Click Create new below the **Public IP** field. In the **Create public IP address** column that opens at right, click the **Standard** radio button under **SKU**. You can change the value in the **Name** field; here we are accepting the default created by Azure, **ngx‑plus‑1‑ip**. Click the ** OK ** button.

+ To allocate a **Standard** public IP address, open the **Networking** tab on the **Create a virtual machine** window. Click Create new below the **Public IP** field. In the {{

@@ -87,7 +87,7 @@ In addition, to install NGINX software by following the linked instructions, you

To change any settings, open the appropriate tab. If the settings are correct, click the Create button.

- If you chose in [Step 4](#create-vm_Basics) to generate a new key pair, a **Generate new key pair** window pops up. Click the Download key and create private resource button.

+ If you chose in [Step 4](#create-vm_Basics) to generate a new key pair, a {{

@@ -107,7 +107,7 @@ In addition, to install NGINX software by following the linked instructions, you

For **ngx-plus-2**, it is probably simplest to repeat Steps 2 through 6 above (or purchase a second prebuilt VM in the [Microsoft Azure Marketplace](https://azuremarketplace.microsoft.com/en-us/marketplace/apps?search=NGINX%20Plus)).

- For the NGINX Open Source VMs, you can create them individually using Steps 2 through 6. Alternatively, create them based on an Azure image. To do so, follow Steps 2 through 6 above to create a source VM (naming it **nginx‑oss**), [install the NGINX Open Source software](#install-nginx) on it, and then follow the instructions in [Optional: Creating an NGINX Open Source Image](#create-nginx-oss-image).

+ For the NGINX Open Source VMs, you can create them individually using Steps 2 through 6. Alternatively, create them based on an Azure image. To do so, follow Steps 2 through 6 above to create a source VM (naming it {{

-2. On the page that opens (**ngx‑plus‑1** in this guide), note the VM's public IP address (in the **Public IP address** field in the right column).

+2. On the page that opens ({{

@@ -130,7 +130,7 @@ To install and configure NGINX Open Source or NGINX Plus on a VM, you need to o

where

- - `

diff --git a/content/nginx/deployment-guides/single-sign-on/oidc-njs/active-directory-federation-services.md b/content/nginx/deployment-guides/single-sign-on/oidc-njs/active-directory-federation-services.md

index 870198ac6..6d0173780 100644

--- a/content/nginx/deployment-guides/single-sign-on/oidc-njs/active-directory-federation-services.md

+++ b/content/nginx/deployment-guides/single-sign-on/oidc-njs/active-directory-federation-services.md

@@ -50,9 +50,9 @@ The instructions assume you have the following:

Create an AD FS application for NGINX Plus:

-1. Open the AD FS Management window. In the navigation column on the left, right‑click on the **Application Groups** folder and select **Add Application Group** from the drop‑down menu.

+1. Open the AD FS Management window. In the navigation column on the left, right‑click on the **Application Groups** folder and select {{

- 2. In the **Redirect URI** field, type the URI of the NGINX Plus instance including the port number, and ending in **/\_codexch**. Here we’re using **https://my‑nginx.example.com:443/\_codexch**. Click the Add button.

+ 2. In the **Redirect URI** field, type the URI of the NGINX Plus instance including the port number, and ending in **/\_codexch**. Here we’re using {{

-4. In the **Configure Application Credentials** step, click the **Generate a shared secret** checkbox. Make a note of the secret that AD FS generates (perhaps by clicking the **Copy to clipboard** button and pasting the clipboard content into a file). You will add the secret to the NGINX Plus configuration in [Step 4 of _Configuring NGINX Plus_](#nginx-plus-variables). Click the Next > button.

+4. In the {{
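The redirect URI format described in the steps above (scheme, hostname, explicit port, and the `/_codexch` suffix) can be sketched as a small helper. The function name is hypothetical; the hostname and port in the usage line are the ones this guide uses.

```python
def redirect_uri(host: str, port: int = 443) -> str:
    """Build the redirect URI: the NGINX Plus host, an explicit port, and /_codexch."""
    return f"https://{host}:{port}/_codexch"


print(redirect_uri("my-nginx.example.com"))  # https://my-nginx.example.com:443/_codexch
```

Keeping the port explicit matters because the value registered with the identity provider generally has to match the URI that NGINX Plus sends character for character.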

@@ -87,7 +87,7 @@ Create an AD FS application for NGINX Plus:

Configure NGINX Plus as the OpenID Connect relying party:

-1. Create a clone of the [**nginx‑openid‑connect**](https://github.com/nginxinc/nginx-openid-connect) GitHub repository.

+1. Create a clone of the {{

-3. In the **Create a user pool** window that opens, type a value in the **Pool name** field (in this guide, it's **nginx‑plus‑pool**), then click the Review defaults button.

+3. In the **Create a user pool** window that opens, type a value in the **Pool name** field (in this guide, it's {{

@@ -70,11 +70,11 @@ Create a new application for NGINX Plus in the Cognito GUI:

5. On the **App clients** tab which opens, click Add an app client.

-6. On the **Which app clients will have access to this user pool?** window which opens, enter a value (in this guide, **nginx‑plus‑app**) in the **App client name** field. Make sure the **Generate client secret** box is checked, then click the Create app client button.

+6. On the **Which app clients will have access to this user pool?** window which opens, enter a value (in this guide, {{

-7. On the confirmation page which opens, click **Return to pool details** to return to the **Review** tab. On that tab click the Create pool button at the bottom. (The screenshot in [Step 4](#cognito-review-tab) shows the button.)

+7. On the confirmation page which opens, click {{

-9. Click **Users and groups** in the left navigation column. In the interface that opens, designate the users (or group of users, on the **Groups** tab) who will be able to use SSO for the app being proxied by NGINX Plus. For instructions, see the Cognito documentation about [creating users](https://docs.aws.amazon.com/cognito/latest/developerguide/how-to-create-user-accounts.html), [importing users](https://docs.aws.amazon.com/cognito/latest/developerguide/cognito-user-pools-using-import-tool.html), or [adding a group](https://docs.aws.amazon.com/cognito/latest/developerguide/cognito-user-pools-user-groups.html).

+9. Click {{

-10. Click **App clients** in the left navigation bar. On the tab that opens, click the Show Details button in the box labeled with the app client name (in this guide, **nginx‑plus‑app**).

+10. Click **App clients** in the left navigation bar. On the tab that opens, click the Show Details button in the box labeled with the app client name (in this guide, {{

-11. On the details page that opens, make note of the values in the **App client id** and **App client secret** fields. You will add them to the NGINX Plus configuration in [Step 3 of _Configuring NGINX Plus_](#nginx-plus-variables).

+11. On the details page that opens, make note of the values in the {{

-12. Click **App client settings** in the left navigation column. In the tab that opens, perform the following steps:

+12. Click {{

-13. Click **Domain name** in the left navigation column. In the tab that opens, type a domain prefix in the **Domain prefix** field under **Amazon Cognito domain** (in this guide, **my‑nginx‑plus**). Click the Save changes button.

+13. Click **Domain name** in the left navigation column. In the tab that opens, type a domain prefix in the **Domain prefix** field under {{

@@ -120,7 +120,7 @@ Create a new application for NGINX Plus in the Cognito GUI:

Configure NGINX Plus as the OpenID Connect relying party:

-1. Create a clone of the [**nginx‑openid‑connect**](https://github.com/nginxinc/nginx-openid-connect) GitHub repository.

+1. Create a clone of the {{

4. On the **NGINX Plus** page that opens, enter or select these values on the Settings tab:

- **Access Type** – **confidential**

- - **Valid Redirect URIs** – The URI of the NGINX Plus instance, including the port number, and ending in **/\_codexch** (in this guide it is **https://my‑nginx.example.com:443/_codexch**)

+ - **Valid Redirect URIs** – The URI of the NGINX Plus instance, including the port number, and ending in **/\_codexch** (in this guide it is {{

8. In the left navigation column, click **Users**. On the **Users** page that opens, either click the name of an existing user, or click the **Add user** button in the upper right corner to create a new user. For complete instructions, see the [Keycloak documentation](https://www.keycloak.org/docs/latest/server_admin/index.html#user-management).

-9. On the management page for the user (here, **user01**), click the Role Mappings tab. On the page that opens, select **NGINX‑Plus** on the **Client Roles** drop‑down menu. Click **nginx‑keycloak‑role** in the **Available Roles** box, then click the **Add selected** button below the box. The role then appears in the **Assigned Roles** and **Effective Roles** boxes, as shown in the screenshot.

+9. On the management page for the user (here, **user01**), click the Role Mappings tab. On the page that opens, select {{

@@ -101,7 +101,7 @@ Create a Keycloak client for NGINX Plus in the Keycloak GUI:

Configure NGINX Plus as the OpenID Connect relying party:

-1. Create a clone of the [**nginx‑openid‑connect**](https://github.com/nginxinc/nginx-openid-connect) GitHub repository.

+1. Create a clone of the {{

-3. On the **Find Applications** page that opens, type **OpenID Connect** in the search box. Click on the **OpenID Connect (OIDC)** row that appears.

+3. On the **Find Applications** page that opens, type {{

-4. On the **Add OpenId Connect (OIDC)** page that opens, change the value in the **Display Name** field to **NGINX Plus** and click the Save button.

+4. On the **Add OpenId Connect (OIDC)** page that opens, change the value in the **Display Name** field to {{

-5. When the save completes, a new set of choices appears in the left navigation bar. Click **Configuration**. In the **Redirect URI's** field, type the URI of the NGINX Plus instance including the port number, and ending in **/\_codexch** (in this guide it is **https://my‑nginx.example.com:443/_codexch**). Then click the Save button.

+5. When the save completes, a new set of choices appears in the left navigation bar. Click **Configuration**. In the **Redirect URI's** field, type the URI of the NGINX Plus instance including the port number, and ending in **/\_codexch** (in this guide it is {{

-6. When the save completes, click **SSO** in the left navigation bar. Click **Show client secret** below the **Client Secret** field. Record the values in the **Client ID** and **Client Secret** fields. You will add them to the NGINX Plus configuration in [Step 4 of _Configuring NGINX Plus_](#nginx-plus-variables).

+6. When the save completes, click **SSO** in the left navigation bar. Click {{

-7. Assign users to the application (in this guide, **NGINX Plus**) to enable them to access it for SSO. OneLogin recommends using [roles](https://onelogin.service-now.com/kb_view_customer.do?sysparm_article=KB0010606) for this purpose. You can access the **Roles** page under Users in the title bar.

+7. Assign users to the application (in this guide, {{
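Several of the steps in these guides ask for a redirect URI made up of the NGINX Plus host, an explicit port number, and the **/\_codexch** suffix. The following is a minimal Python sketch of that rule, not part of the guides themselves. The helper names `build_redirect_uri` and `is_valid_redirect_uri` are hypothetical, the hostname is written with ASCII hyphens, and requiring the `https` scheme is an assumption based on the example value used in the guides.

```python
from urllib.parse import urlsplit

REDIRECT_PATH = "/_codexch"  # suffix the guides require on the redirect URI


def build_redirect_uri(host: str, port: int = 443) -> str:
    """Build a redirect URI with an explicit port and the /_codexch suffix."""
    return f"https://{host}:{port}{REDIRECT_PATH}"


def is_valid_redirect_uri(uri: str) -> bool:
    """Check the three properties the steps describe.

    Assumption: https is required, since the guides' example uses it.
    """
    parts = urlsplit(uri)
    return (
        parts.scheme == "https"
        and parts.port is not None      # the port must be written out
        and parts.path == REDIRECT_PATH
    )


if __name__ == "__main__":
    uri = build_redirect_uri("my-nginx.example.com")
    print(uri, is_valid_redirect_uri(uri))
```

A check like this can catch a redirect URI that omits the port before it is pasted into the identity provider's form.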

diff --git a/content/nginx/deployment-guides/single-sign-on/oidc-njs/ping-identity.md b/content/nginx/deployment-guides/single-sign-on/oidc-njs/ping-identity.md

index d4901c65a..495b2ebad 100644

--- a/content/nginx/deployment-guides/single-sign-on/oidc-njs/ping-identity.md

+++ b/content/nginx/deployment-guides/single-sign-on/oidc-njs/ping-identity.md

@@ -56,30 +56,30 @@ Create a new application for NGINX Plus:

1. Log in to your Ping Identity account. The administrative dashboard opens automatically. In this guide, we show the PingOne for Enterprise dashboard, and for brevity refer simply to ”PingOne”.

-2. Click APPLICATIONS in the title bar, and on the **My Applications** page that opens, click **OIDC** and then the **+ Add Application** button.

+2. Click APPLICATIONS in the title bar, and on the **My Applications** page that opens, click **OIDC** and then the {{

-3. The **Add OIDC Application** window pops up. Click the ADVANCED CONFIGURATION box, and then the Next button.

+3. The {{

-4. In section 1 (PROVIDE DETAILS ABOUT YOUR APPLICATION), type a name in the **APPLICATION NAME** field and a short description in the **SHORT DESCRIPTION** field. Here, we're using **nginx‑plus‑application** and **NGINX Plus**. Choose a value from the **CATEGORY** drop‑down menu; here we’re using **Information Technology**. You can also add an icon if you wish. Click the Next button.

+4. In section 1 (PROVIDE DETAILS ABOUT YOUR APPLICATION), type a name in the **APPLICATION NAME** field and a short description in the **SHORT DESCRIPTION** field. Here, we're using {{

5. In section 2 (AUTHORIZATION SETTINGS), perform these steps:

- 1. Under **GRANTS**, click both **Authorization Code** and **Implicit**.

- 2. Under **CREDENTIALS**, click the **+ Add Secret** button. PingOne creates a client secret and opens the **CLIENT SECRETS** field to display it, as shown in the screenshot. To see the actual value of the secret, click the eye icon.

+ 1. Under **GRANTS**, click both {{

+ 2. Under **CREDENTIALS**, click the {{

3. Click the Next button.

6. In section 3 (SSO FLOW AND AUTHENTICATION SETTINGS):

- 1. In the **START SSO URL** field, type the URL where users access your application. Here we’re using **https://example.com**.

- 2. In the **REDIRECT URIS** field, type the URI of the NGINX Plus instance including the port number, and ending in **/\_codexch**. Here we’re using **https://my‑nginx‑plus.example.com:443/\_codexch** (the full value is not visible in the screenshot).

+ 1. In the {{

-7. In section 4 (DEFAULT USER PROFILE ATTRIBUTE CONTRACT), optionally add attributes to the required **sub** and **idpid** attributes, by clicking the **+ Add Attribute** button. We’re not adding any in this example. When finished, click the Next button.

+7. In section 4 (DEFAULT USER PROFILE ATTRIBUTE CONTRACT), optionally add attributes to the required **sub** and **idpid** attributes, by clicking the {{

-8. In section 5 (CONNECT SCOPES), click the circled plus-sign on the **OpenID Profile (profile)** and **OpenID Profile Email (email)** scopes in the **LIST OF SCOPES** column. They are moved to the **CONNECTED SCOPES** column, as shown in the screenshot. Click the Next button.

+8. In section 5 (CONNECT SCOPES), click the circled plus-sign on the {{

@@ -107,14 +107,14 @@ Create a new application for NGINX Plus:

-11. You are returned to the **My Applications** window, which now includes a row for **nginx‑plus‑application**. Click the toggle switch at the right end of the row to the “on” position, as shown in the screenshot. Then click the “expand” icon at the end of the row, to display the application’s details.

+11. You are returned to the **My Applications** window, which now includes a row for {{

12. On the page that opens, make note of the values in the following fields on the **Details** tab. You will add them to the NGINX Plus configuration in [Step 4 of _Configuring NGINX Plus_](#nginx-plus-variables).

- - **CLIENT ID** (in the screenshot, **28823604‑83c5‑4608‑88da‑c73fff9c607a**)

+ - **CLIENT ID** (in the screenshot, {{

@@ -124,7 +124,7 @@ Create a new application for NGINX Plus:

Configure NGINX Plus as the OpenID Connect relying party:

-1. Create a clone of the [**nginx‑openid‑connect**](https://github.com/nginxinc/nginx-openid-connect) GitHub repository.

+1. Create a clone of the {{


+ {{ $PageTitle := .Title }}

+

+

+ {{end}}

+

+

+

+ {{ if or (lt .WordCount 1) (eq $PageTitle "F5 NGINX One Console") (eq $PageTitle "F5 NGINX App Protect DoS") (eq $PageTitle "F5 NGINX Plus") }}

+

+

+ + {{ .Title }} +

+ {{ if .Description }} ++ {{ .Description | markdownify }} +

+ {{ end}} + {{ if .Content }} ++ {{ .Content | markdownify }} +

+ {{ end }} +

+

+

+ {{ range .Pages.GroupBy "Section" }}

+ {{ range .Pages.ByWeight }}

+

+ {{ if eq $PageTitle "F5 NGINX One Console" }}

+

+

+ {{ end }}

+ {{ end }}

+

+

+ + + {{ .Title }} +

+ {{ if and (eq $PageTitle "F5 NGINX One Console") (eq .Title "Manage your NGINX fleet")}}
+Simplify, scale, secure, and collaborate with your NGINX fleet
+ {{ end }}
+ {{ if and (eq $PageTitle "F5 NGINX One Console") (eq .Title "Get started")}}
+See benefits from the NGINX One Console
+ {{ end }}
+ {{ if and (eq $PageTitle "F5 NGINX One Console") (eq .Title "Draft new configurations")}}
+Work with Staged Configurations
+ {{ end }}
+
+ {{ if and (eq $PageTitle "F5 NGINX One Console") (eq .Title "Manage your NGINX instances")}}
+Monitor and maintain your deployments
+ {{ end }}
+ {{ if and (eq $PageTitle "F5 NGINX One Console") (eq .Title "Organize users with RBAC")}}
+Assign responsibilities with role-based access control
+ {{ end }}
+ {{ if and (eq $PageTitle "F5 NGINX One Console") (eq .Title "Automate with the NGINX One API")}}
+Manage your NGINX fleet over REST
+ {{ end }}
+ {{ if and (eq $PageTitle "F5 NGINX One Console") (eq .Title "Glossary")}}
+Learn terms unique to NGINX One Console
+ {{ end }}
+ {{ if and (eq $PageTitle "F5 NGINX One Console") (eq .Title "Connect your instances") }}
+Work with data plane keys, containers, and proxy servers
+ {{ end }}
+ {{ if and (eq $PageTitle "F5 NGINX One Console") (eq .Title "Set up metrics") }}
+Review your deployments in a dashboard
+ {{ end }}
+ {{ if and (eq $PageTitle "F5 NGINX One Console") (eq .Title "API")}}
+These are API docs

+-

+ {{ range .Pages }}

+

- {{ .Title }} + {{ end }} +

Other Products

+ {{ $nginxProducts := slice
+ (dict "title" "NGINX Instance Manager" "url" "/nginx-instance-manager" "imgSrc" "NGINX-Instance-Manager-product-icon" "type" "local-console-option" "description" "Track and control NGINX Open Source and NGINX Plus instances.")
+ (dict "title" "NGINX Ingress Controller" "url" "/nginx-ingress-controller" "imgSrc" "NGINX-Ingress-Controller-product-icon" "type" "kubernetes-solutions" "description" "Kubernetes traffic management with API gateway, identity, and observability features.")
+ (dict "title" "NGINX Gateway Fabric" "url" "/nginx-gateway-fabric" "imgSrc" "NGINX-product-icon" "type" "kubernetes-solutions" "description" "Next generation Kubernetes connectivity using the Gateway API.")
+ (dict "title" "NGINX App Protect WAF" "url" "/nginx-app-protect-waf" "imgSrc" "NGINX-App-Protect-WAF-product-icon" "type" "security" "description" "Lightweight, high-performance, advanced protection against Layer 7 attacks on your apps and APIs.")
+ (dict "title" "NGINX App Protect DoS" "url" "/nginx-app-protect-dos" "imgSrc" "NGINX-App-Protect-DoS-product-icon" "type" "security" "description" "Defend, adapt, and mitigate against Layer 7 denial-of-service attacks on your apps and APIs.")
+ (dict "title" "NGINX Plus" "url" "/nginx" "imgSrc" "NGINX-Plus-product-icon-RGB" "type" "modern-app-delivery" "description" "The all-in-one load balancer, reverse proxy, web server, content cache, and API gateway.")
+ (dict "title" "NGINX Open Source" "url" "https://nginx.org/en/docs/" "imgSrc" "NGINX-product-icon" "type" "modern-app-delivery" "description" "The open source all-in-one load balancer, content cache, and web server")
+ }}
+ {{ $groupedProducts := dict
+ "local-console-option" (where $nginxProducts "type" "local-console-option")
+ "kubernetes-solutions" (where $nginxProducts "type" "kubernetes-solutions")
+ "security" (where $nginxProducts "type" "security")
+ "modern-app-delivery" (where $nginxProducts "type" "modern-app-delivery")
+ }}
+ {{ range $type, $products := $groupedProducts }}
+

+

+ {{ end }}

+ {{ end }}

+ {{ $type | humanize | title }}

+ {{ range $products }} +

+

+ {{ end }}

+

+

+

+

+

+  + {{ .title }}

+

+ {{ .title }}

+

+ + {{ if .description }}{{ .description | markdownify }}{{ end }} +

+
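The "Other Products" block in the template above filters one product list into per-type groups and then prints a heading for each group. As a rough illustration of that data flow only, here is a minimal Python sketch. It is not the Hugo implementation: `group_by_type` and `heading` are hypothetical helpers, the product records are trimmed to the `title` and `type` fields, and `heading` only approximates the effect of `humanize | title` on these four keys. Go templates visit map keys in sorted order when ranging, which the sketch mirrors with `sorted`.

```python
# Product records trimmed to the two fields the grouping depends on.
PRODUCTS = [
    {"title": "NGINX Instance Manager", "type": "local-console-option"},
    {"title": "NGINX Ingress Controller", "type": "kubernetes-solutions"},
    {"title": "NGINX Gateway Fabric", "type": "kubernetes-solutions"},
    {"title": "NGINX App Protect WAF", "type": "security"},
    {"title": "NGINX App Protect DoS", "type": "security"},
    {"title": "NGINX Plus", "type": "modern-app-delivery"},
    {"title": "NGINX Open Source", "type": "modern-app-delivery"},
]


def group_by_type(products):
    """Collect product titles under their "type" key, keeping list order."""
    grouped = {}
    for product in products:
        grouped.setdefault(product["type"], []).append(product["title"])
    return grouped


def heading(type_key):
    """Approximate `humanize | title` for simple hyphenated keys (assumption)."""
    return type_key.replace("-", " ").title()


if __name__ == "__main__":
    grouped = group_by_type(PRODUCTS)
    # Sorted iteration mirrors how a Go template ranges over a map.
    for type_key in sorted(grouped):
        print(heading(type_key))
        for title in grouped[type_key]:
            print("  " + title)
```

Grouping in a single pass avoids repeating one filter per type, although the template's explicit `where` calls make the set of expected types visible at a glance.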