Protect AI workflows from bad data with one line of code.

ADRI is a small Python library that enforces data quality before data reaches an AI agent step. It turns data assumptions into executable data contracts and applies them automatically at runtime.

No platform. No services. Runs locally in your project.

    from adri import adri_protected

    @adri_protected(contract="customer_data", data_param="data")
    def process_customers(data):
        results = ...  # Your agent logic here
        return results

ADRI provides:

- A decorator to guard a function or agent step

- A CLI for setup and inspection

- A reusable library of contract templates

Install with pip:

    pip install adri

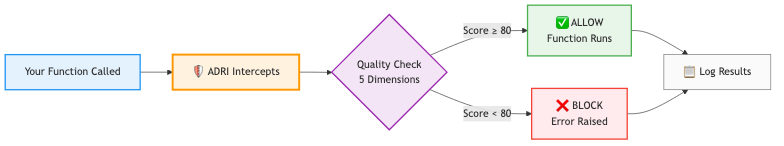

Set up ADRI in your project:

    adri setup

On the first run:

- ADRI inspects the input data

- Creates a data contract (stored as YAML)

- Saves local artifacts for debugging/inspection

On later runs:

- Incoming data is checked against the contract

- ADRI calculates quality scores across 5 dimensions

- Based on your settings, it either:

  - allows execution, or

  - blocks execution (raises)

In plain English: ADRI sits between your code and its data, checking quality before letting data through. Good data passes, bad data gets blocked.
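To make the idea of a guard that sits between a function and its data concrete, here is a minimal sketch in plain Python. It is not ADRI's implementation: the `guard` decorator, the `required_fields` argument, and the `DataQualityError` class are hypothetical names invented for illustration, and the only check performed is that required fields are present.

```python
import functools
import inspect


class DataQualityError(ValueError):
    """Raised when guarded data fails its checks (hypothetical name)."""


def guard(required_fields, data_param="data"):
    """Check the argument named `data_param` before the wrapped function runs."""
    def decorator(func):
        sig = inspect.signature(func)

        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            # Resolve the guarded argument whether it was passed
            # positionally or by keyword.
            bound = sig.bind(*args, **kwargs)
            rows = bound.arguments[data_param]
            for i, row in enumerate(rows):
                missing = [f for f in required_fields if row.get(f) is None]
                if missing:
                    # Bad data: block execution before the function body runs.
                    raise DataQualityError(f"row {i} is missing {missing}")
            # Good data: let the call through unchanged.
            return func(*args, **kwargs)

        return wrapper
    return decorator


@guard(required_fields=["id", "email"], data_param="customers")
def count_customers(customers):
    return len(customers)


good = [{"id": 1, "email": "user1@example.com"}]
bad = [{"id": None, "email": "user2@example.com"}]

print(count_customers(good))
try:
    count_customers(bad)
except DataQualityError as exc:
    print(f"blocked: {exc}")
```

The wrapped function never sees the bad rows, which is the property the decorator pattern is meant to provide.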

    from adri import adri_protected
    import pandas as pd

    @adri_protected(contract="customer_data", data_param="customer_data")
    def analyze_customers(customer_data):
        """Your AI agent logic."""
        print(f"Analyzing {len(customer_data)} customers")
        return {"status": "complete"}

    # First run with good data
    customers = pd.DataFrame({
        "id": [1, 2, 3],
        "email": ["user1@example.com", "user2@example.com", "user3@example.com"],
        "signup_date": ["2024-01-01", "2024-01-02", "2024-01-03"]
    })

    analyze_customers(customers)  # ✅ Runs, auto-generates contract

What happened:

- Function executed successfully

- ADRI analyzed the data structure

- Generated a YAML contract under your project

- Future runs validate against that contract
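The generate-then-validate cycle described above can be sketched without any library. This is an assumption-laden illustration, not ADRI's contract format or inference logic: `infer_contract` and `find_violations` are hypothetical helpers, rows are plain dicts rather than a DataFrame, and the contract records only each field's observed type and whether it was ever missing.

```python
def infer_contract(rows):
    """Record each field's observed type and whether it was ever None."""
    fields = {}
    for row in rows:
        for name, value in row.items():
            spec = fields.setdefault(name, {"type": None, "nullable": False})
            if value is None:
                spec["nullable"] = True
            elif spec["type"] is None:
                spec["type"] = type(value).__name__
    return fields


def find_violations(rows, contract):
    """List every place where `rows` departs from the inferred contract."""
    problems = []
    for i, row in enumerate(rows):
        for name, spec in contract.items():
            value = row.get(name)
            if value is None:
                if not spec["nullable"]:
                    problems.append(f"row {i}: '{name}' is missing")
            elif spec["type"] is not None and type(value).__name__ != spec["type"]:
                problems.append(f"row {i}: '{name}' should be {spec['type']}")
    return problems


# First run: the sample data defines the contract.
first_run = [
    {"id": 1, "email": "user1@example.com", "signup_date": "2024-01-01"},
    {"id": 2, "email": "user2@example.com", "signup_date": "2024-01-02"},
]
contract = infer_contract(first_run)

# Later run: a missing ID and a missing signup_date are both reported.
later_run = [{"id": None, "email": "user3@example.com"}]
for problem in find_violations(later_run, contract):
    print(problem)
```

This sketch checks structure only. Format rules such as a valid email address would need additional checks beyond type and presence.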

Future runs with bad data:

    bad_customers = pd.DataFrame({
        "id": [1, 2, None],  # Missing ID
        "email": ["user1@example.com", "invalid-email", "user3@example.com"],  # Bad email
        # Missing signup_date column
    })

    analyze_customers(bad_customers)  # ❌ Raises exception with quality report

Documentation:

- Quickstart – 2-minute integration

- Getting started – tutorial

- How it works – quality dimensions explained

- Data contracts – concept + examples

- Contracts library – reusable templates

- Framework patterns – LangChain, CrewAI, AutoGen, etc.

- CLI reference – CLI commands

- FAQ – common questions

- Examples – real-world examples

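The `on_failure` setting controls what happens when data fails validation. The function names below are placeholders:

    from adri import adri_protected

    # Raise mode (default): blocks bad data by raising an exception
    @adri_protected(contract="data", data_param="data", on_failure="raise")
    def strict_step(data): ...

    # Warn mode: logs a warning but continues execution
    @adri_protected(contract="data", data_param="data", on_failure="warn")
    def lenient_step(data): ...

    # Continue mode: silently continues
    @adri_protected(contract="data", data_param="data", on_failure="continue")
    def silent_step(data): ...

ADRI includes reusable contract templates for common domains and AI workflows.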
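The three `on_failure` modes can be pictured as a small dispatch step that runs after validation. The sketch below is a guess at the general shape, not ADRI's code: `handle_failure`, `DataQualityError`, and the logger name are hypothetical, and only the mode strings `"raise"`, `"warn"`, and `"continue"` come from the decorator examples.

```python
import logging

logger = logging.getLogger("quality_guard")  # hypothetical logger name


class DataQualityError(ValueError):
    """Raised in "raise" mode when validation found problems (hypothetical)."""


def handle_failure(problems, on_failure="raise"):
    """Return True if the guarded function should still run."""
    if not problems:
        return True  # nothing failed, so the mode is irrelevant
    summary = "; ".join(problems)
    if on_failure == "raise":
        raise DataQualityError(summary)
    if on_failure == "warn":
        logger.warning("data quality issues: %s", summary)
        return True
    if on_failure == "continue":
        return True  # proceed without reporting anything
    raise ValueError(f"unknown on_failure mode: {on_failure!r}")


issues = ["row 2: 'id' is missing"]
print(handle_failure(issues, on_failure="warn"))
print(handle_failure(issues, on_failure="continue"))
try:
    handle_failure(issues, on_failure="raise")
except DataQualityError as exc:
    print(f"blocked: {exc}")
```

Rejecting unknown mode strings is a design choice in this sketch. It keeps a typo such as `"warm"` from silently behaving like `"continue"`.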

- Star the project: https://github.com/adri-standard/adri

- Share feedback/requests in Discussions: https://github.com/adri-standard/adri/discussions

- Contribute new contracts and examples: CONTRIBUTING.md

ADRI works with any data format. Sample data files are included for common scenarios:

Protect your API integrations with structural validation.

- Sample: `api_response.json`

Validate context passed between agents in CrewAI, AutoGen, etc.

- Sample: `crew_context.json`

Ensure documents have correct structure before indexing.

- Sample: `rag_documents.json`

Apache 2.0. See LICENSE.

Built with ❤️ by Thomas Russell at Verodat.

One line of code. Local enforcement. Reliable agents.