Jialv Zou1, Bencheng Liao2,1, Qian Zhang3, Wenyu Liu1, Xinggang Wang1,📧

1 School of EIC, HUST, 2 Institute of Artificial Intelligence, HUST, 3 Horizon Robotics

(📧) corresponding author.

[2025-3-19]: We release the initial version of the code and weights, along with documentation and training/inference scripts.

[2025-3-12]: The OmniMamba arXiv paper is released. Code and weights are coming soon. Please stay tuned! ☕️

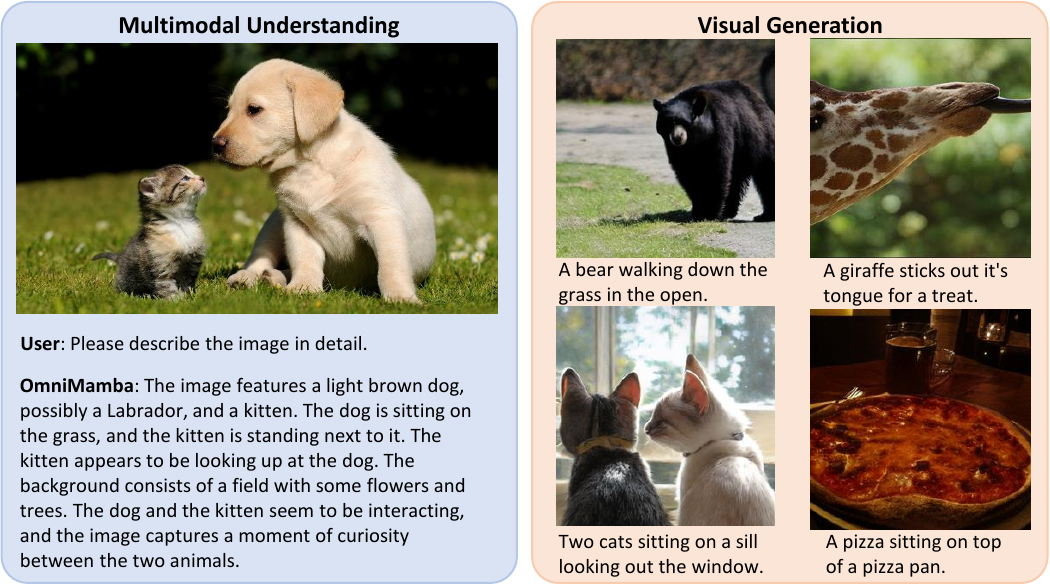

- To the best of our knowledge, OmniMamba is the first linear-architecture-based model for unified multimodal understanding and visual generation.

- OmniMamba achieves competitive performance with only 2M training samples.

- OmniMamba is highly efficient, achieving up to a 119.2× speedup and a 63% GPU memory reduction for long-sequence generation compared to Transformer-based counterparts.

- Clone this repository and navigate to the OmniMamba folder

git clone https://github.com/hustvl/OmniMamba

cd OmniMamba

- Install the package

# Install PyTorch (built for CUDA 11.8) before everything else. The commands below assume you are using cu118.

conda create -n omnimamba python=3.10 -y

conda activate omnimamba

pip install torch==2.2.1 torchvision==0.17.1 torchaudio==2.2.1 --index-url https://download.pytorch.org/whl/cu118

pip install -r requirements.txt

wandb login

Please download our pretrained model at OmniMamba-1.3b.
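Before running inference, it can help to confirm that the environment matches the versions pinned in the install commands. The following is a minimal sketch, not part of the repository: the helper name `check_pins` is hypothetical, and it only compares installed package metadata against the three pins from the `pip install` line, ignoring local build tags such as `+cu118`.

```python
from importlib import metadata

# Pins copied from the pip command in the install steps.
PINNED = {"torch": "2.2.1", "torchvision": "0.17.1", "torchaudio": "2.2.1"}


def check_pins(pins):
    """Return {package: status}; status is 'ok', 'missing', or 'mismatch (found X)'."""
    report = {}
    for name, wanted in pins.items():
        try:
            found = metadata.version(name)
        except metadata.PackageNotFoundError:
            report[name] = "missing"
            continue
        # Drop a local build tag, e.g. "2.2.1+cu118" -> "2.2.1".
        base = found.split("+", 1)[0]
        report[name] = "ok" if base == wanted else f"mismatch (found {found})"
    return report


if __name__ == "__main__":
    for name, status in check_pins(PINNED).items():
        print(f"{name}: {status}")
```

This check does not verify that a GPU is visible; it only reports whether the pinned packages are installed at the expected versions.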

Multimodal Understanding

python scripts/inference_mmu.py --image_path mmu_validation/cat_dog.png --question 'Please describe it in detail.'

Visual Generation

python scripts/inference_t2i.py --prompt 'A bed in a bedroom between two lamps.'
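To run the two inference scripts over several inputs, one option is to build the commands in Python so that prompts containing quotes or spaces are escaped correctly. This is a sketch under assumptions: the helper names `mmu_command` and `t2i_command` are hypothetical, the second prompt is made up for illustration, and only the flags shown in the commands above are used.

```python
import shlex


def mmu_command(image_path, question):
    """Argument list for one multimodal-understanding run."""
    return ["python", "scripts/inference_mmu.py",
            "--image_path", image_path, "--question", question]


def t2i_command(prompt):
    """Argument list for one text-to-image run."""
    return ["python", "scripts/inference_t2i.py", "--prompt", prompt]


if __name__ == "__main__":
    prompts = [
        "A bed in a bedroom between two lamps.",
        "A cat's bed next to a window.",  # hypothetical prompt with an apostrophe
    ]
    for prompt in prompts:
        # shlex.join produces a shell-safe string that can be pasted into a terminal.
        print(shlex.join(t2i_command(prompt)))
```

Passing the argument list directly to `subprocess.run(cmd, check=True)` from the repository root avoids shell quoting altogether.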

ShareGPT4V: Please refer to the TinyLLaVA documentation to download the dataset (without SAM) for pre-training.

LVIS-Instruct-4V: Please refer to its documentation.

LRV-Instruct: Please refer to its documentation.

You can download our preprocessed JSON file on Hugging Face.

Folder structure

OmniMamba

├── dataset/

│ ├── pretokenized_coco_train2014.jsonl

│ ├── llava/

│ │ ├── gqa/

│ │ ├── LAION-CC-SBU/

│ │ ├── ocr_vqa/

│ │ ├── POPE/

│ │ ├── share_textvqa/

│ │ ├── textvqa/

│ │ ├── vg/

│ │ ├── web-celebrity/

│ │ ├── web-landmark/

│ │ ├── wikiart/

│ │ ├── coco/

│ │ ├── lrv_Instruct/

│ │ ├── share-captioner_coco_lcs_676k_1121.json

│ │ ├── sharegpt4v_llava_v1_5_lvis4v_lrv_mix1231k.json
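A quick way to catch a misplaced download before training is to compare the local tree against the layout above. The sketch below is an illustration, not a repository script: the helper `missing_entries` is hypothetical, and the expected paths are copied from the folder tree, relative to the repository root.

```python
from pathlib import Path

# Sub-folders and files listed under dataset/llava/ in the folder tree.
LLAVA_ENTRIES = [
    "gqa", "LAION-CC-SBU", "ocr_vqa", "POPE", "share_textvqa", "textvqa",
    "vg", "web-celebrity", "web-landmark", "wikiart", "coco", "lrv_Instruct",
    "share-captioner_coco_lcs_676k_1121.json",
    "sharegpt4v_llava_v1_5_lvis4v_lrv_mix1231k.json",
]

EXPECTED = ["dataset/pretokenized_coco_train2014.jsonl"] + [
    f"dataset/llava/{entry}" for entry in LLAVA_ENTRIES
]


def missing_entries(root, expected=EXPECTED):
    """Return the expected relative paths that do not exist under root."""
    root = Path(root)
    return [rel for rel in expected if not (root / rel).exists()]


if __name__ == "__main__":
    missing = missing_entries(".")
    if missing:
        print("Missing dataset entries:")
        for rel in missing:
            print(f"  {rel}")
    else:
        print("Dataset layout matches the expected structure.")
```

The check only tests for existence; it does not validate the contents of any folder or JSON file.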

Stage 1: MMU Pre-Training

accelerate launch --mixed_precision=bf16 --machine_rank=0 --num_processes=8 --num_machines=1 --main_process_port=8888 train_stage2.py --config config/config_stage1_mmu.yaml

Stage 1: T2I Pre-Training

accelerate launch --mixed_precision=bf16 --machine_rank=0 --num_processes=8 --num_machines=1 --main_process_port=8888 train_stage2.py --config config/config_stage1_t2i.yaml

Stage 2: Unified Fine-Tuning

accelerate launch --mixed_precision=bf16 --machine_rank=0 --num_processes=8 --num_machines=1 --main_process_port=8888 train_stage2.py --config config/config_stage2.yaml
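The three launch commands differ only in the config file, so they can be generated from one template when adapting the GPU count or port. The sketch below assumes nothing beyond the flags shown above: the helper `launch_command` and the `STAGES` mapping are hypothetical, and the script name is taken verbatim from the commands as listed.

```python
import shlex

# Stage names mapped to the config files used in the commands above.
STAGES = {
    "stage1_mmu": "config/config_stage1_mmu.yaml",
    "stage1_t2i": "config/config_stage1_t2i.yaml",
    "stage2": "config/config_stage2.yaml",
}


def launch_command(config, script="train_stage2.py", num_processes=8, port=8888):
    """Build the single-machine accelerate launch argument list for one stage."""
    return [
        "accelerate", "launch",
        "--mixed_precision=bf16",
        "--machine_rank=0",
        f"--num_processes={num_processes}",
        "--num_machines=1",
        f"--main_process_port={port}",
        script,
        "--config", config,
    ]


if __name__ == "__main__":
    for name, config in STAGES.items():
        print(f"# {name}")
        print(shlex.join(launch_command(config)))
```

For example, `launch_command(STAGES["stage2"], num_processes=4)` yields the same command with four processes instead of eight.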

We build our project based on

Thanks for their great work.

If you find OmniMamba useful in your research or applications, please consider giving us a star 🌟 and citing it with the following BibTeX entry.

@misc{zou2025omnimambaefficientunifiedmultimodal,

title={OmniMamba: Efficient and Unified Multimodal Understanding and Generation via State Space Models},

author={Jialv Zou and Bencheng Liao and Qian Zhang and Wenyu Liu and Xinggang Wang},

year={2025},

eprint={2503.08686},

archivePrefix={arXiv},

primaryClass={cs.CV},

url={https://arxiv.org/abs/2503.08686},

}