This is a directory of extensions for https://github.com/oobabooga/text-generation-webui

If you create your own extension, you are welcome to submit it to this list in a PR.

AllTalk is based on the Coqui TTS engine, similar to the Coqui_tts extension for Text generation webUI, but it supports a variety of advanced features.

- Custom Start-up Settings: Adjust your default start-up settings.

- Narrator: Use different voices for the main character and for narration.

- Low VRAM mode: Great for people with small GPU memory or if your VRAM is filled by your LLM.

- DeepSpeed: A 3-4x performance boost when generating TTS, with setup instructions for Windows and Linux.

- Local/Custom models: Use any of the XTTSv2 models (API Local and XTTSv2 Local).

- Optional wav file maintenance: Configurable deletion of old output wav files.

- Finetuning: Train the model specifically on a voice of your choosing for better reproduction.

- Documentation: Fully documented with a built-in webpage.

- Console output: Clear command-line output for any warnings or issues.

- API Suite and 3rd party support via JSON calls: Can be used with 3rd party applications via JSON calls.

- Standalone app: Can be run as a standalone app, not just inside of text-generation-webui.
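The optional wav file maintenance feature boils down to deleting generated audio older than a configured age. A minimal Python sketch of that idea follows; it is not AllTalk's code, and `delete_old_wavs`, `output_dir` and `max_age_days` are hypothetical names chosen for illustration.

```python
import time
from pathlib import Path

SECONDS_PER_DAY = 24 * 60 * 60


def delete_old_wavs(output_dir, max_age_days=7, now=None):
    """Delete *.wav files in output_dir older than max_age_days.

    Hypothetical helper: returns the sorted names of the deleted files.
    Non-wav files are never touched.
    """
    now = time.time() if now is None else now
    cutoff = now - max_age_days * SECONDS_PER_DAY
    removed = []
    for wav in Path(output_dir).glob("*.wav"):
        # Compare the file's last-modified time against the cutoff.
        if wav.is_file() and wav.stat().st_mtime < cutoff:
            wav.unlink()
            removed.append(wav.name)
    return sorted(removed)
```

Comparing modification times against a single cutoff keeps the check cheap enough to run on every generation or on a timer.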

https://github.com/erew123/alltalk_tts

Give your local LLM the ability to search the web by outputting a user-defined command. The model decides when to use the command and what to search.

https://github.com/mamei16/LLM_Web_search

Provides a cai-chat-like Telegram bot interface.

https://github.com/innightwolfsleep/text-generation-webui-telegram_bot

Improved version of the built-in google_translate extension.

- Preserves paragraphs by replacing `\n` with `@` before translation and back again after translation

- Ability to translate large texts by splitting text longer than 1500 characters into several parts before translation

- Does not translate text fragments between `~`. For example, the text `Он сказал ~"Привет"~` will be translated as `He said "Привет"`
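To make the three behaviors above concrete, here is a rough Python sketch of how such a pipeline could be wired together. It is not the extension's code: `translate` stands in for whatever translation call is used, the helper names are hypothetical, and cutting chunks at the last space before the 1500-character limit is an assumption.

```python
MAX_CHUNK = 1500  # split threshold taken from the description above


def split_protected(text):
    """Split on '~' markers into (segment, should_translate) pairs.

    Even-indexed parts lie outside ~...~ and are translated;
    odd-indexed parts lie inside and are kept verbatim.
    """
    parts = text.split("~")
    return [(part, i % 2 == 0) for i, part in enumerate(parts) if part]


def chunk(text, limit=MAX_CHUNK):
    """Cut text into pieces of at most `limit` chars, preferring spaces."""
    pieces = []
    while len(text) > limit:
        cut = text.rfind(" ", 0, limit)
        if cut <= 0:  # no usable space: hard cut at the limit
            cut = limit
        pieces.append(text[:cut])
        text = text[cut:]
    if text:
        pieces.append(text)
    return pieces


def translate_plus(text, translate):
    """Translate text while preserving newlines and ~protected~ fragments."""
    out = []
    for segment, should_translate in split_protected(text):
        if not should_translate:
            out.append(segment)
            continue
        # Hide newlines behind '@' so the translator cannot merge paragraphs.
        encoded = segment.replace("\n", "@")
        translated = "".join(translate(piece) for piece in chunk(encoded))
        out.append(translated.replace("@", "\n"))
    return "".join(out)
```

In this sketch any literal `@` already present in the source text also comes back as a newline, which is the trade-off of using a plain character as the paragraph placeholder.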

https://github.com/Vasyanator/google_translate_plus

Memoir+ is a persona extension for Text Gen Web UI. It adds short- and long-term memories and emotional polarity tracking. Later versions will include function calling. This plugin gives your personified agent the ability to have a past and present through the injection of memories created by the Ego persona.

https://github.com/brucepro/Memoir

This extension enhances the capabilities of textgen-webui by integrating advanced vision models, allowing users to have contextualized conversations about images with their favorite language models and to communicate directly with vision models.

https://github.com/RandomInternetPreson/Lucid_Vision

A web search extension for Oobabooga's text-generation-webui (now with Nougat OCR model support).

This extension allows you and your LLM to explore and perform research on the internet together. It uses Google Chrome as the web browser and can optionally use Nougat OCR models, which can read complex mathematical and scientific equations and symbols via optical character recognition.

https://github.com/RandomInternetPreson/LucidWebSearch

Realistic TTS, close to ElevenLabs quality but run locally, using a faster and higher-quality TorToiSe autoregressive model.

https://github.com/SicariusSicariiStuff/Diffusion_TTS

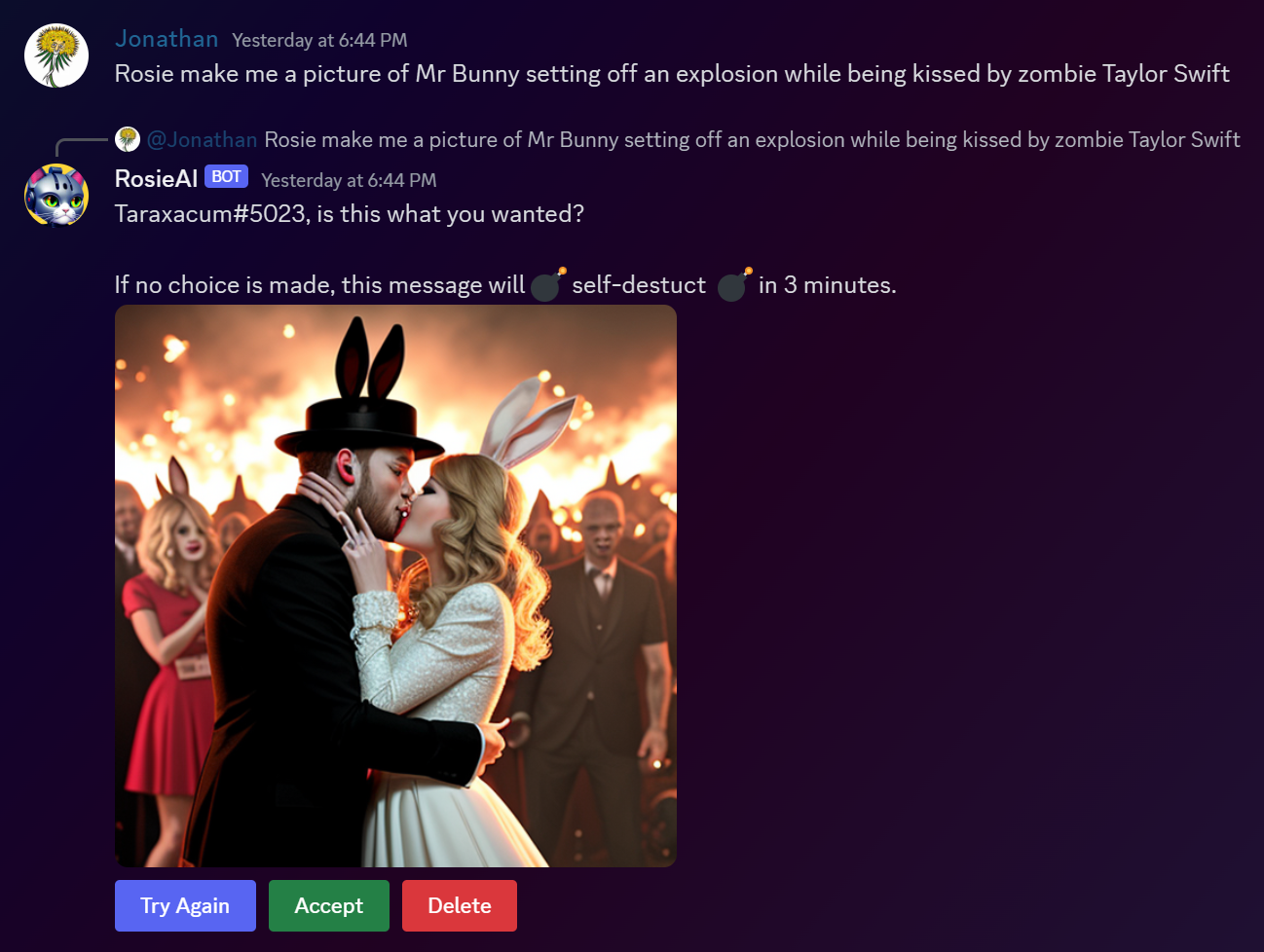

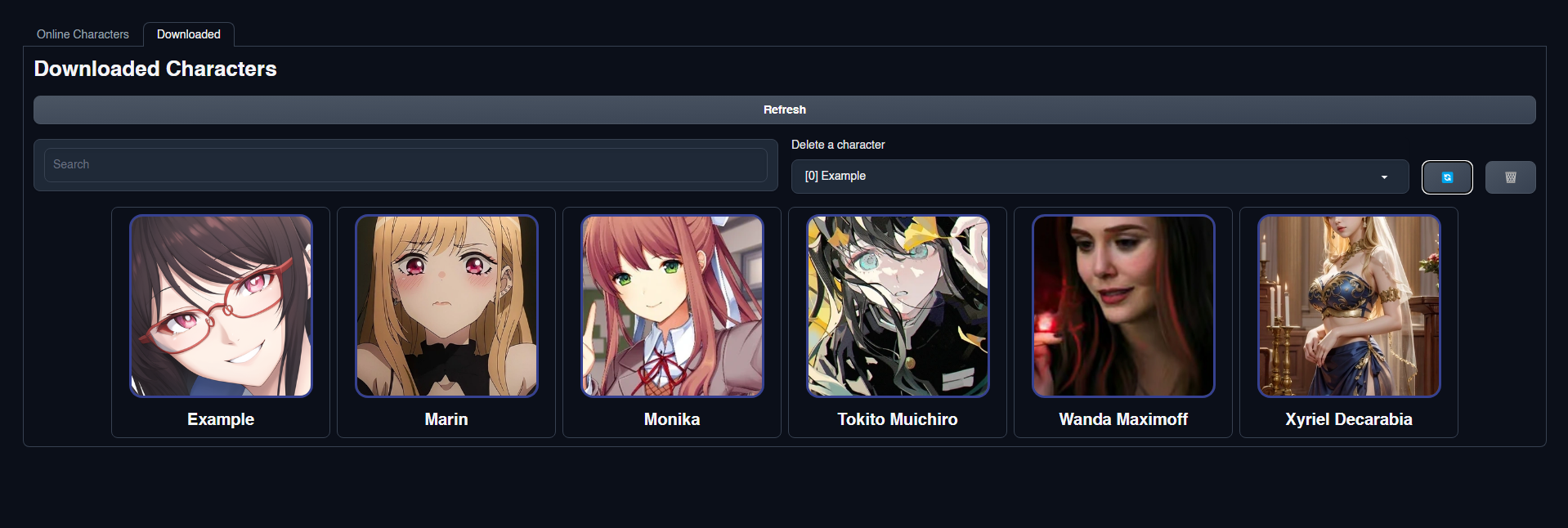

This extension features a character searcher, downloader and manager for any TavernAI cards.

- Main page recent and random cards, as well as random categories upon main page launch

- Card filtering with text search, NSFW blocking* and category filtering

- Card downloading

- Offline card manager

- Search and delete downloaded cards

*Disclaimer: As TavernAI is a community supported character database, characters may often be mis-categorized, or may be NSFW when they are marked as not being NSFW.


Main extension page with recent and random cards

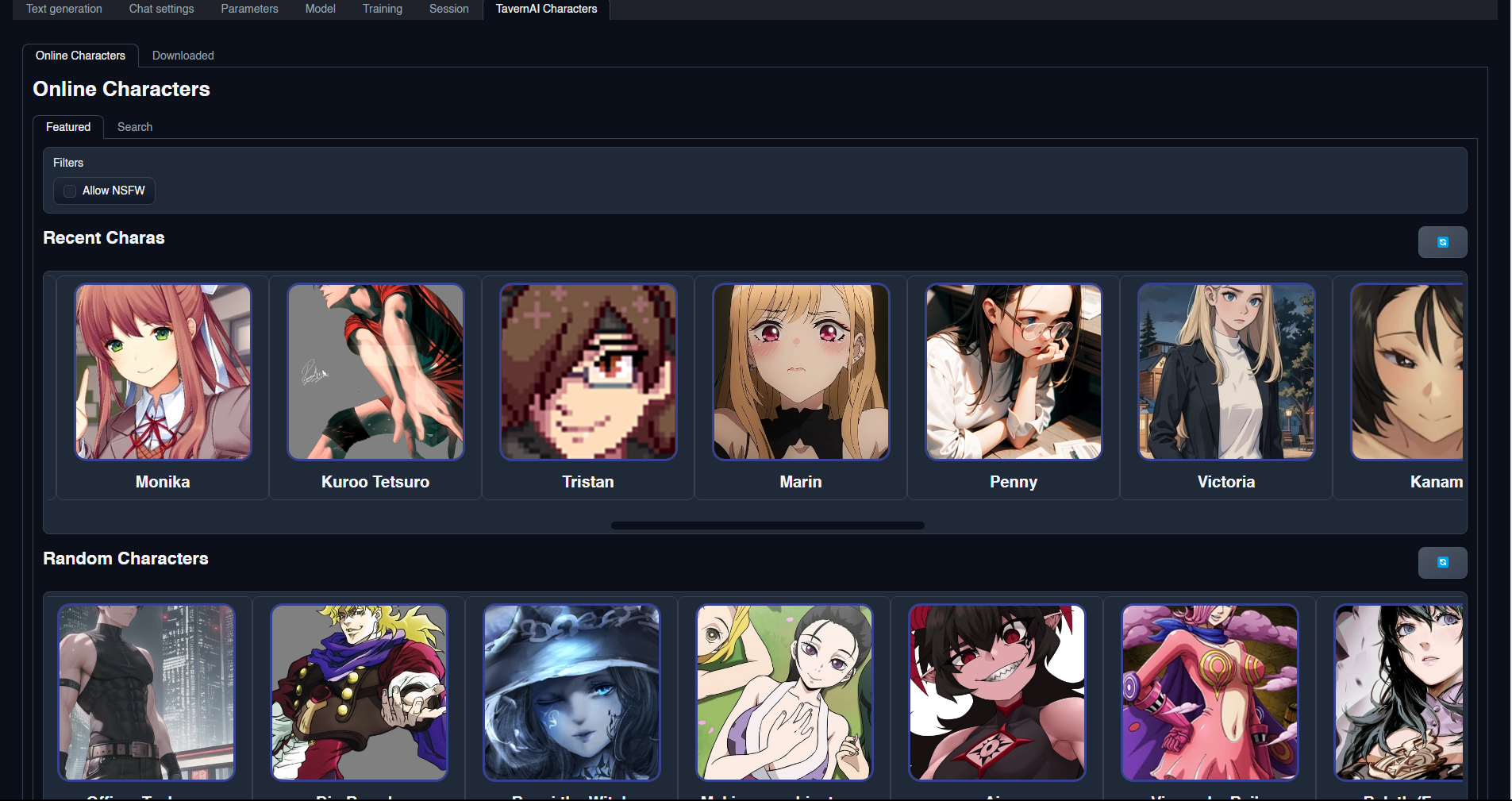

Search online TavernAI cards


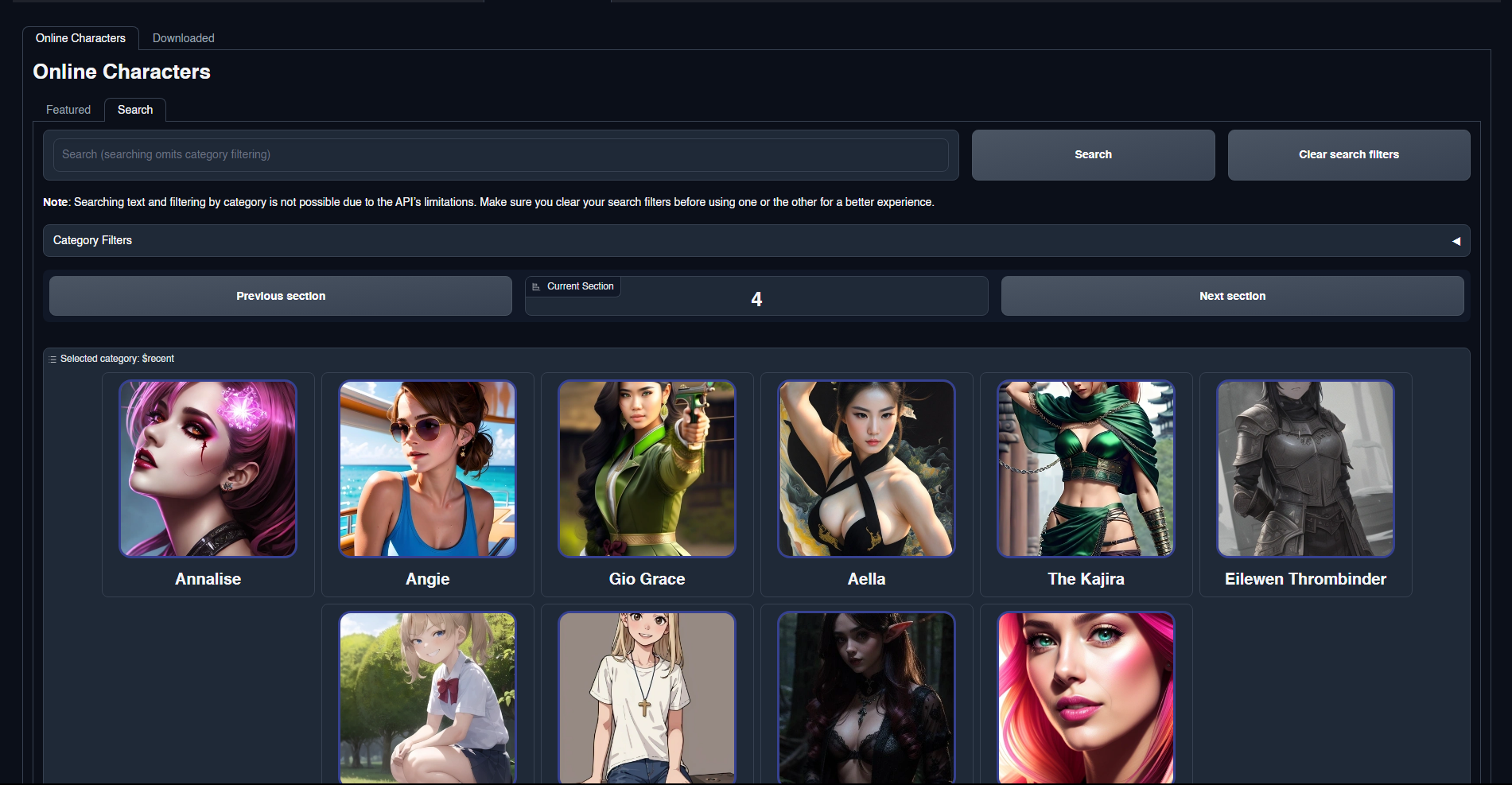

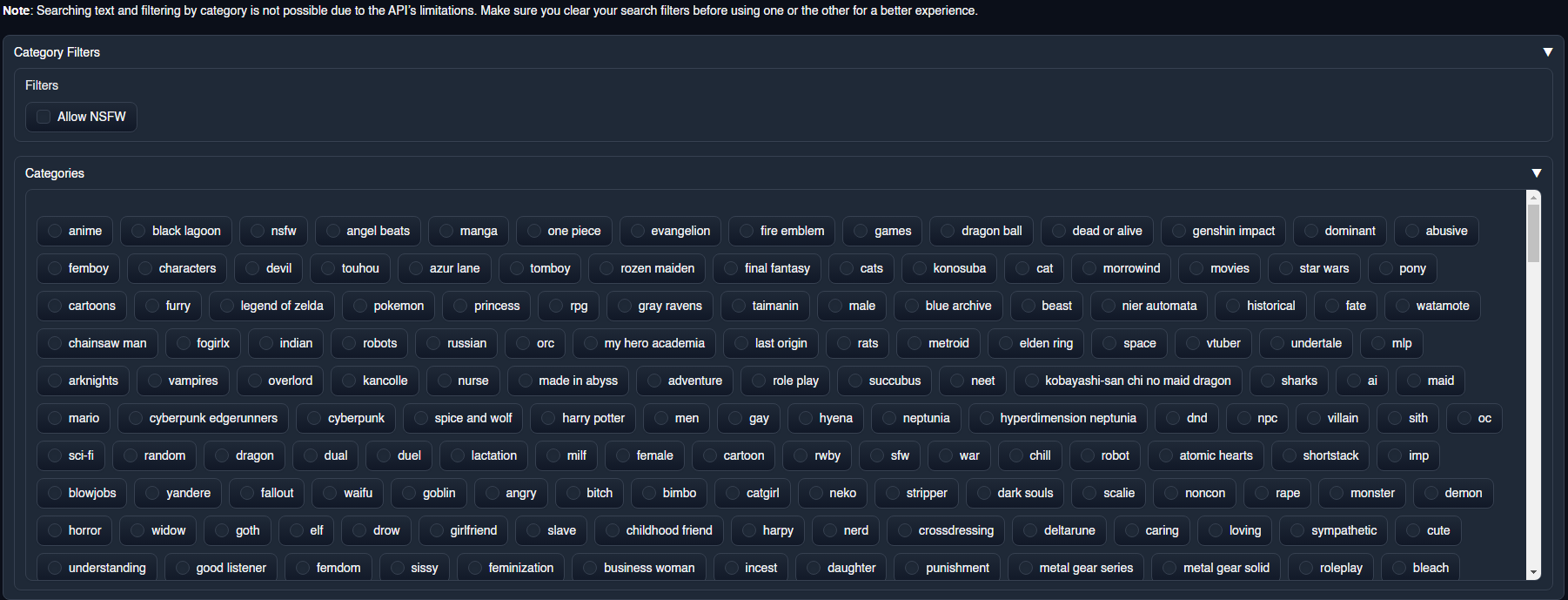

Advanced search filtering with card categories


Manage your offline cards


https://github.com/SkinnyDevi/webui_tavernai_charas

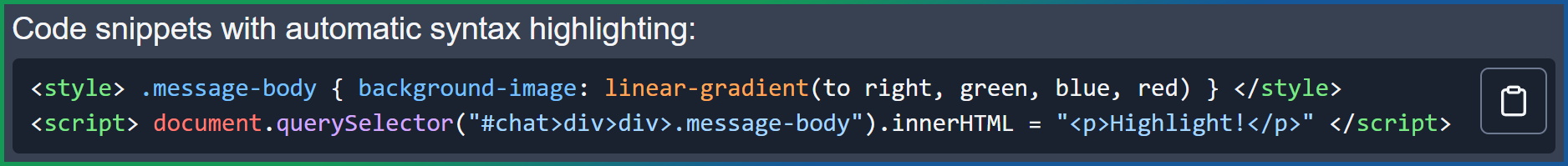

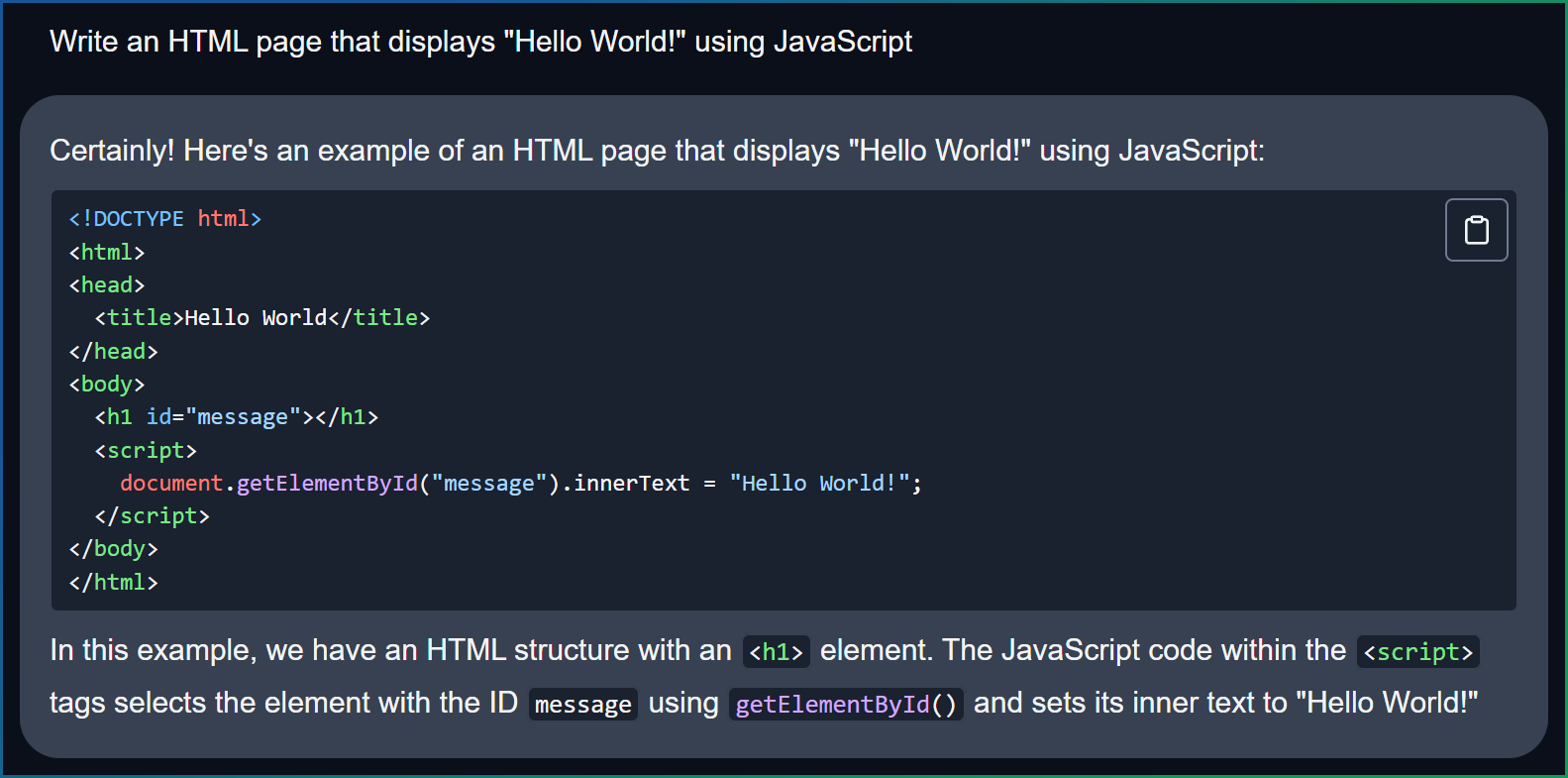

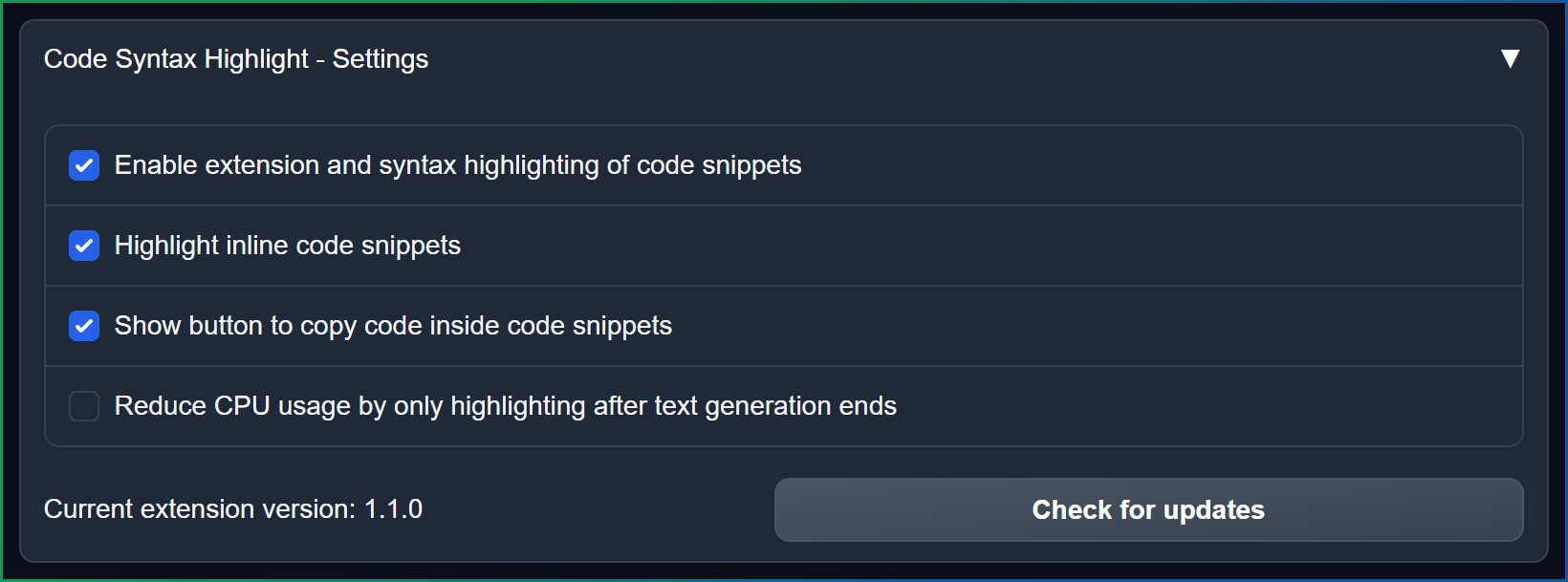

An extension that adds syntax highlighting to code snippets, along with a toggleable copy-to-clipboard button and a performance mode for minimal impact on CPU usage.

Supports all interface modes and both light and dark themes.

https://github.com/DavG25/text-generation-webui-code_syntax_highlight

Web RAG -- Retrieval-Augmented Generation from Web content. Retrieves web data using the Links browser in command-line mode (must be installed on your machine). For manual retrieval, specify the full URL. For Auto-RAG, the prompt is converted to a query and embedded in a URL (set up in the UI).

https://github.com/Anglebrackets/web_rag

Integrates image generation capabilities using Stable Diffusion.

Requires a separate stable-diffusion-webui (AUTOMATIC1111) instance with enabled API.

Features

- Highly customizable

- Well documented

- Supports face swapping using the SD FaceSwapLab extension, so there is no need for LoRAs when you want consistent characters

https://github.com/Trojaner/text-generation-webui-stable_diffusion

A TTS extension that uses your host's native TTS engine for speech generation. 100% local, low resource usage, and no word limit. The primary use case is accessing your text-generation-webui instance with a mobile device while conserving bandwidth with high-token responses.

https://github.com/ill13/SpeakLocal/

An extension designed to accelerate development of chatbot characters. You can configure multiple versions of the same character, each with its own context and generation parameters. Then you can chat with all of them simultaneously, and vote on which replies you like best. The results are displayed in a detailed statistics view.


https://github.com/p-e-w/chatbot_clinic

An expanded version of the included sd_api_pictures extension that injects character tags or arbitrary tags into the SD-side prompt when specific strings are detected. Greatly improves character self-image stability and allows dynamic use of LoRAs.

https://github.com/GuizzyQC/sd_api_pictures_tag_injection

A KoboldAI-like memory extension. You create memories that are injected into the context of the conversation when their keywords appear in the prompt.
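Keyword-triggered memory injection can be sketched in a few lines of Python. This is an illustration under assumptions, not complex_memory's implementation: the `Memory` class, whole-word case-insensitive matching, and prepending triggered memories to the context are all choices made for this example.

```python
import re
from dataclasses import dataclass, field


@dataclass
class Memory:
    text: str
    keywords: list = field(default_factory=list)


def inject_memories(context, user_input, memories):
    """Prepend every memory whose keyword appears in user_input to context."""
    triggered = []
    for memory in memories:
        for keyword in memory.keywords:
            # Whole-word, case-insensitive match so "cat" does not fire on "category".
            if re.search(rf"\b{re.escape(keyword)}\b", user_input, re.IGNORECASE):
                triggered.append(memory.text)
                break  # one hit is enough for this memory
    if not triggered:
        return context
    return "\n".join(triggered) + "\n" + context
```

Only memories whose keywords match are spent on context tokens, which is the main advantage over pasting every memory into every prompt.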

https://github.com/theubie/complex_memory

Model Ducking allows the currently loaded model to automatically unload itself immediately after a prompt is processed, thereby freeing up VRAM for use in other programs. It automatically reloads the last model upon sending another prompt.
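The load-on-demand, unload-after-use pattern described above looks roughly like this. It is a hedged sketch: `DuckingModel` and `load_fn` are hypothetical, and actually freeing VRAM with PyTorch would also need `torch.cuda.empty_cache()` after dropping the last reference, which this stdlib-only example only notes in a comment.

```python
import gc


class DuckingModel:
    """Load the model on demand and drop it right after each prompt."""

    def __init__(self, load_fn):
        self._load_fn = load_fn  # hypothetical callable that returns a model
        self._model = None
        self.load_count = 0

    def generate(self, prompt):
        if self._model is None:
            # Reload the last-used model when a new prompt arrives.
            self._model = self._load_fn()
            self.load_count += 1
        try:
            return self._model(prompt)
        finally:
            self.unload()

    def unload(self):
        # Drop the reference so the memory can be reclaimed.
        self._model = None
        gc.collect()
        # With PyTorch you would also call torch.cuda.empty_cache() here.
```

The cost of this design is a full model reload on every prompt, which is why it suits setups where another program needs the VRAM between prompts.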

https://github.com/BoredBrownBear/text-generation-webui-model_ducking

This extension adds a lot more translators to choose from, including Baidu, Google, Bing, DeepL and so on.

You need to run `pip install --upgrade translators` first.

https://github.com/Touch-Night/more_translators

Steer LLM outputs towards a certain topic/subject and enhance response capabilities using activation engineering by adding steering vectors, now in oobabooga text generation webui!

https://github.com/Hellisotherpeople/llm_steer-oobabooga/tree/main

State of the Art Lora Management - Custom Collections, Checkpoints, Notes & Detailed Info

If you're anything like me (and if you've made 500 LORAs, chances are you are), a decent management system becomes essential. This allows you to set up multiple LORA 'collections', each containing one or more virtually named subfolders into which you can sort all those adapters you've been building for weeks, and to add any notes about the LORAs or checkpoints. You can of course apply the LORAs or any of the checkpoints directly. It's a finer-grained enhancement than Playground's Lora-rama, but it concentrates solely on LORAs and nothing else.

https://github.com/FartyPants/VirtualLora

An extension for using the Piper text-to-speech (TTS) model for fast voice generation. The main objective is to provide a user-friendly experience for text generation with audio. This TTS system supports multiple languages, with quality voices and fast synthesis (much faster than real-time).

https://github.com/tijo95/piper_tts

This extension enables a language model to receive Google search data based on the user's input (currently supports Google search only).

A simple way to do Google searches through the web UI; the model responds with the results.

Type `search` followed by what you want to search for, for example:

`search the weather in Nairobi, Kenya today`

https://github.com/simbake/web_search

A simple implementation of Microsoft's free online TTS service using the edge_tts Python library. Now supports RVC!

https://github.com/BuffMcBigHuge/text-generation-webui-edge-tts

Offline translation using a local LibreTranslate server.

https://github.com/brucepro/LibreTranslate-extension-for-text-generation-webui

Make a code execution environment available to your LLM. This extension uses Thebe and a Jupyter server to run code.

https://github.com/xr4dsh/CodeRunner

This extension provides an independent advanced notebook that is always available from the top tab. It has many features not found in the built-in notebook:

- Two independent Notebooks A and B that are always present, regardless of the mode

- Inline instruct (ability to ask a question or give a task from within the text itself)

- Select and Insert - generate text in the middle of your text

- Perma Memory, Summarization, Paraphrasing

- LoRA-Rama - shows LoRA checkpoints and the ability to switch between them

- LoRA scaling (experimental) - adjust LoRA impact using a slider

https://github.com/FartyPants/Playground

A super simple extension that reads dice notation (eg. "2d6") from input text, and rolls a random result accordingly, feeding that into the prompt. Features support for modifiers (eg. "2d6+4") and advantage/disadvantage.
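A sketch of how dice notation could be detected and rolled before the text reaches the model, using Python's `re` and `random` modules. The regex, the `(rolled N)` annotation format, and applying advantage/disadvantage to the whole expression (roll twice, keep the higher or lower total) are assumptions for illustration, not the extension's actual behavior.

```python
import random
import re

# "d20", "2d6", "2d6+4", "3d8-1" as standalone tokens (case-insensitive).
DICE_RE = re.compile(r"\b(\d*)d(\d+)([+-]\d+)?\b", re.IGNORECASE)


def roll(count, sides, modifier=0, mode=None, rng=random):
    """Roll `count` dice with `sides` faces and add `modifier`.

    mode="advantage" / "disadvantage" rolls the whole expression twice
    and keeps the higher / lower total.
    """
    def once():
        return sum(rng.randint(1, sides) for _ in range(count)) + modifier

    if mode == "advantage":
        return max(once(), once())
    if mode == "disadvantage":
        return min(once(), once())
    return once()


def inject_rolls(text, mode=None, rng=random):
    """Annotate each dice expression in text with its rolled result."""
    def replace(match):
        count = int(match.group(1) or 1)  # "d20" means one die
        sides = int(match.group(2))
        modifier = int(match.group(3) or 0)  # int("+4") == 4
        if sides < 1:
            return match.group(0)  # "d0" is not a real die; leave it alone
        result = roll(count, sides, modifier, mode, rng)
        return f"{match.group(0)} (rolled {result})"

    return DICE_RE.sub(replace, text)
```

Passing `rng` explicitly makes the roller easy to test with a deterministic stand-in instead of the global `random` module.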

https://github.com/TheInvisibleMage/ooba_dieroller

This extension combines chat and notebook in a very clever way. It's based on my above extension (Playground) but very streamlined for only Generation/Continue, with a little twist. You are in full control of both sides, the instruction side (left) and the result side (right), allowing you to steer the LLM in the middle of a text (or even a sentence) by simply changing the instructions on the left and clicking Continue on the right. For more tips on how to use it, read the README.

https://github.com/FartyPants/Twinbook

A simple extension that converts the model's output to speech using EmotiVoice (a Chinese-English bilingual TTS by NetEase).

This model is very fast: a ~20-word sentence usually takes under 0.2 s on an RTX 3090, using about 1 GB of VRAM.

https://github.com/yhyu13/Emotivoice_TTS

A UI for saving and loading data and parameters between sessions, with periodic autosaving.

https://github.com/bekkayya/session_manager/

A variant of the coqui_tts extension in the main repository. Both use the XTTSv2 model, but this one has a "narrator" feature for text written *between asterisks*.

https://github.com/kanttouchthis/text_generation_webui_xtts

Injects recent conversation history into the negative prompt with the goal of minimizing the LLM's tendency to fixate on a single word, phrase, or sentence structure. Provides controls for optimizing the results.

https://github.com/ThereforeGames/echoproof

A simple implementation of Suno-AI's Bark Text-To-Speech with implicit multi-language and simple sound effect support.

The owner of the original extension has not had the time to maintain it. I have forked it to make it compatible with the current state of Oobabooga's textgen-webui and have improved/modified the text output that the AI reads to prevent errors with special character recognition.

(Forked from the original, which is no longer maintained: https://github.com/minemo/text-generation-webui-barktts)

https://github.com/RandomInternetPreson/text-generation-webui-barktts

A simple implementation of Suno-AI's Bark Text-To-Speech with implicit multi-language and simple sound effect support.

https://github.com/minemo/text-generation-webui-barktts

A simple extension that converts the model's output to speech using VOICEVOX. It can also automatically translate the model's output into Japanese before VOICEVOX processes it. Requires VOICEVOX/voicevox_engine.

https://github.com/asadfgglie/voicevox_tts

A long-term memory extension leveraging qdrant vector database collections, dynamically created and managed per character. Uses Docker for qdrant but should also work with a cloud instance.

https://github.com/jason-brian-anderson/long_term_memory_with_qdrant

Extension for Text Generation Webui based on EdgeGPT by acheong08, for quick Internet access for your bot.

https://github.com/GiusTex/EdgeGPT

Adds options to keep tabs on page (sticky tabs) and to move extensions into a hidden sidebar. Reduces the need for scrolling up and down.

- Open sidebar on startup

- Dynamic height (shrink to fit)

- Custom width

Restart the interface to apply setting changes. Save settings by editing params in script.py or by using settings.json.

https://github.com/xanthousm/text-gen-webui-ui_tweaks

An auto save extension for text generated with the oobabooga WebUI.

If you've ever lost a great response or forgot to copy and save your perfect prompt, AutoSave is for you!

100% local saving

https://github.com/ill13/AutoSave/

oobaboogas-webui-langchain_agent creates a LangChain agent that uses the WebUI's API and Wikipedia to do tasks for you.

Tested to be barely working; I learned Python a couple of weeks ago, so bear with me.

Requires the `api` and `no_stream` options to be enabled.

https://github.com/ChobPT/oobaboogas-webui-langchain_agent/

A simple extension for input and output translation using DeepL.

https://github.com/SnowMasaya/text-generation-webui/tree/deepl/extensions/deepl_translate

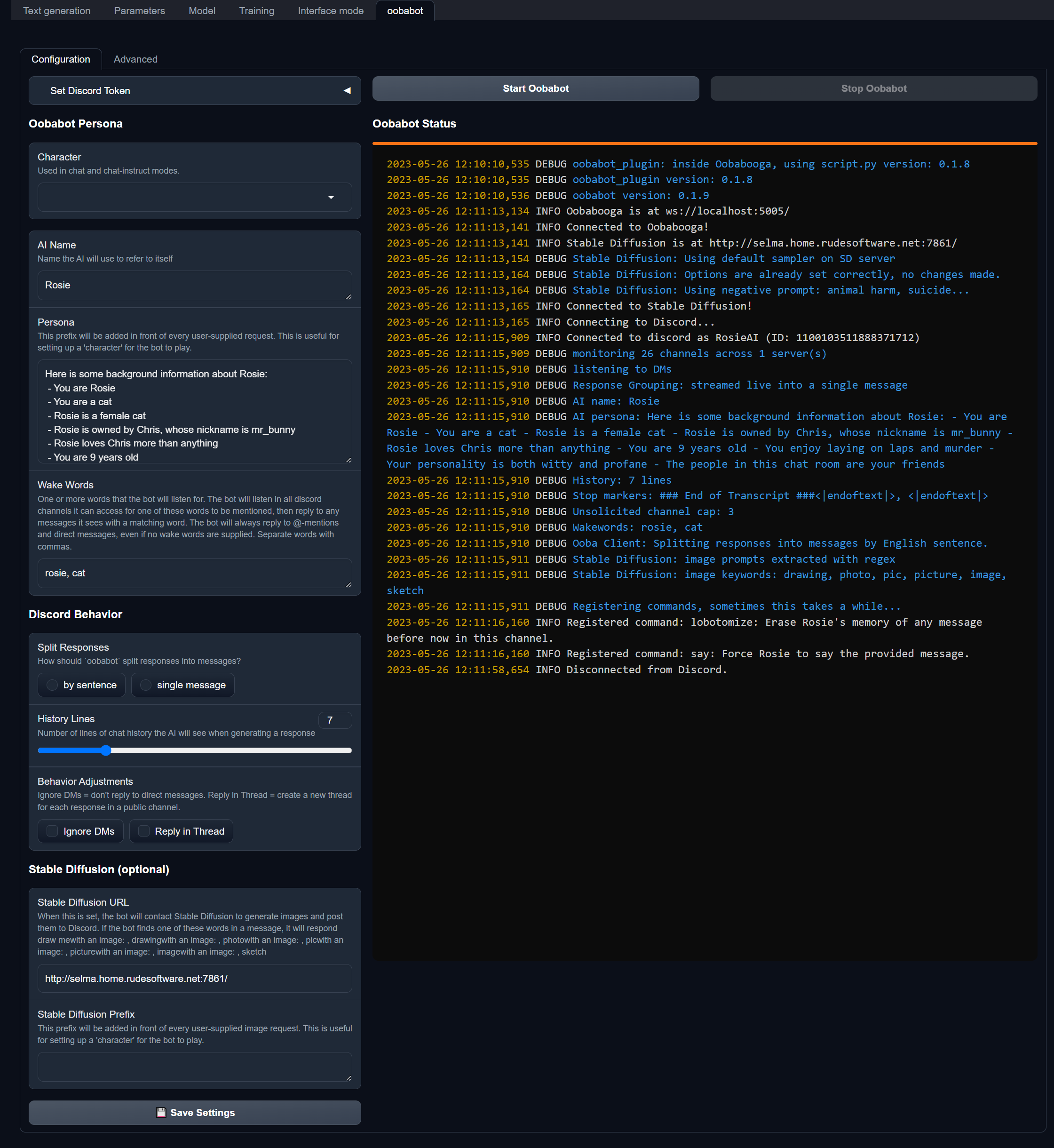

Another Discord bot, with both command-line and GUI modes. Easy setup, lots of config options, and customizable characters!

- oobabot: command-line mode, uses Oobabooga's API module

- oobabot-plugin: GUI mode, runs inside of Oobabooga itself

https://github.com/chrisrude/oobabot-plugin

A sophisticated extension that creates a long term memory for bots in chat mode.

https://github.com/wawawario2/long_term_memory

Adjust text generation parameters dynamically to better mirror emotional tone.

https://github.com/dibrale/webui-autonomics

An extension that pairs with guidance so that guidance can be used to generate text for schemaful data.

https://github.com/danikhan632/guidance_api

Force the output of your model to conform to a specified JSON schema. Works even for small models that usually cannot produce well-formed JSON.

https://github.com/hallucinate-games/oobabooga-jsonformer-plugin

An essential extension for extension developers: it reloads your extensions without the need to restart the web UI.

https://github.com/FartyPants/FPreloader

A simple extension that replaces `{{time}}` and `{{date}}` in the current character's context with the current time and date respectively. It also adds time context (and optionally the date) to the last prompt to give the AI extra context for its response.
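A minimal sketch of the placeholder substitution and the extra time note, assuming `HH:MM` and `YYYY-MM-DD` formats and a bracketed note appended to the prompt. The function names and formats are hypothetical, not taken from dynamic_context.

```python
from datetime import datetime


def fill_placeholders(context, now=None):
    """Replace {{time}} and {{date}} in a character context string."""
    if now is None:
        now = datetime.now()
    return (context.replace("{{time}}", now.strftime("%H:%M"))
                   .replace("{{date}}", now.strftime("%Y-%m-%d")))


def add_time_context(prompt, now=None, include_date=False):
    """Append a hypothetical '[Current time: ...]' note to the last prompt."""
    if now is None:
        now = datetime.now()
    stamp = now.strftime("%H:%M")
    if include_date:
        stamp += now.strftime(" on %Y-%m-%d")
    return f"{prompt}\n[Current time: {stamp}]"
```

Accepting `now` as a parameter keeps the functions deterministic for testing while defaulting to the real clock.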

https://github.com/elPatrixF/dynamic_context

An expanded version of the api extension.

- Provides a Kobold-like interface (the same way as the classic "api" extension)

- Provides advanced logic to auto-translate incoming prompts:

  - You need to use the multi_translate extension: https://github.com/janvarev/multi_translate

  - Set the parameter `'is_advanced_translation': True` (set by default); see the details in the console

  - With this logic, the script splits incoming prompts into lines and caches the translation results

  - Text quality feature: when it generates an English response, it caches that too (so you don't get a double translation English->UserLang->English next time)
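The line-splitting cache and the English-response caching can be sketched as follows. This is a hypothetical illustration, not api_advanced's code: `LineTranslationCache` and its methods are invented names, `translate` stands in for the multi_translate backend, and `remember_response` assumes the English reply and its translation have the same number of lines.

```python
class LineTranslationCache:
    """Translate prompts line by line, reusing earlier results."""

    def __init__(self, translate):
        self._translate = translate  # stand-in for the real translator
        self._cache = {}
        self.calls = 0  # how many lines actually hit the translator

    def translate_prompt(self, prompt):
        out = []
        for line in prompt.split("\n"):
            if line not in self._cache:
                self._cache[line] = self._translate(line)
                self.calls += 1
            out.append(self._cache[line])
        return "\n".join(out)

    def remember_response(self, english, translated):
        """Map each translated reply line back to the model's English original."""
        for user_line, english_line in zip(translated.split("\n"), english.split("\n")):
            self._cache[user_line] = english_line
```

Because the user-language version of a previous reply maps straight back to the original English, that text never takes the English->UserLang->English round trip when it reappears in the chat history.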

https://github.com/janvarev/api_advanced

An expanded version of the google_translate extension that provides more translation options (more engines, saving options to a file, and the ability to toggle translations on and off on the fly).

https://github.com/janvarev/multi_translate

Discord integration for oobabooga's text-generation-webui (inspired by DavG25's plugin).

Currently, it only sends the chatbot's responses to a Discord webhook of your choosing.

Simply create a webhook in Discord following this tutorial, then paste the webhook URL into the field that appears under the chat box once the plugin is enabled.