This code is based on the original PyTorch DCGAN example, modified to output 128x128 images instead of the 64x64 images produced by the original.

A convolution layer has been added to both the generator and the discriminator so that they respectively output and discriminate 128x128 images. To stabilize training, at each epoch Gaussian noise is added to the current training data batch. The number of feature maps has been increased from 64 to 256 in the generator, but kept at 64 in the discriminator. This seemed to help avoid mode collapse.
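As a rough check of why one extra layer per network is enough to go from 64x64 to 128x128, the sketch below computes the spatial size after each layer. It assumes the layer settings of the original DCGAN example (4x4 kernels, stride 2, padding 1, with a stride 1, padding 0 layer at the input of the generator and at the output of the discriminator); the settings actually used in this code may differ. The helper names are hypothetical and used only for illustration.

```python
def conv_transpose_out(size, kernel, stride, padding):
    """Output size of a transposed convolution (dilation 1, no output padding)."""
    return (size - 1) * stride - 2 * padding + kernel


def conv_out(size, kernel, stride, padding):
    """Output size of a regular convolution (dilation 1)."""
    return (size + 2 * padding - kernel) // stride + 1


def generator_sizes(num_upsampling_layers):
    """Spatial sizes from the 1x1 latent vector to the generated image."""
    # Assumed first layer: kernel 4, stride 1, padding 0 (1x1 -> 4x4).
    sizes = [conv_transpose_out(1, kernel=4, stride=1, padding=0)]
    # Assumed remaining layers: kernel 4, stride 2, padding 1 (doubles the size).
    for _ in range(num_upsampling_layers):
        sizes.append(conv_transpose_out(sizes[-1], kernel=4, stride=2, padding=1))
    return sizes


def discriminator_sizes(image_size, num_downsampling_layers):
    """Spatial sizes from the input image to the 1x1 real/fake score."""
    sizes = [image_size]
    # Assumed layers: kernel 4, stride 2, padding 1 (halves the size).
    for _ in range(num_downsampling_layers):
        sizes.append(conv_out(sizes[-1], kernel=4, stride=2, padding=1))
    # Assumed final layer: kernel 4, stride 1, padding 0 (4x4 -> 1x1).
    sizes.append(conv_out(sizes[-1], kernel=4, stride=1, padding=0))
    return sizes


print(generator_sizes(4))           # 64x64 configuration
print(generator_sizes(5))           # one extra layer: 128x128
print(discriminator_sizes(64, 4))   # 64x64 configuration
print(discriminator_sizes(128, 5))  # one extra layer: 128x128
```

Under these assumptions, each stride-2 layer doubles (generator) or halves (discriminator) the spatial size, so a single additional layer in each network covers the step from 64 to 128.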

The parameters have been adapted to run on a GeForce GTX 1060 with 6 GB of memory. The batch size can be increased if more memory is available, but this sometimes makes training unstable. If that happens, the learning rate should be decreased.

Images are from ImageNet's cloud synset.

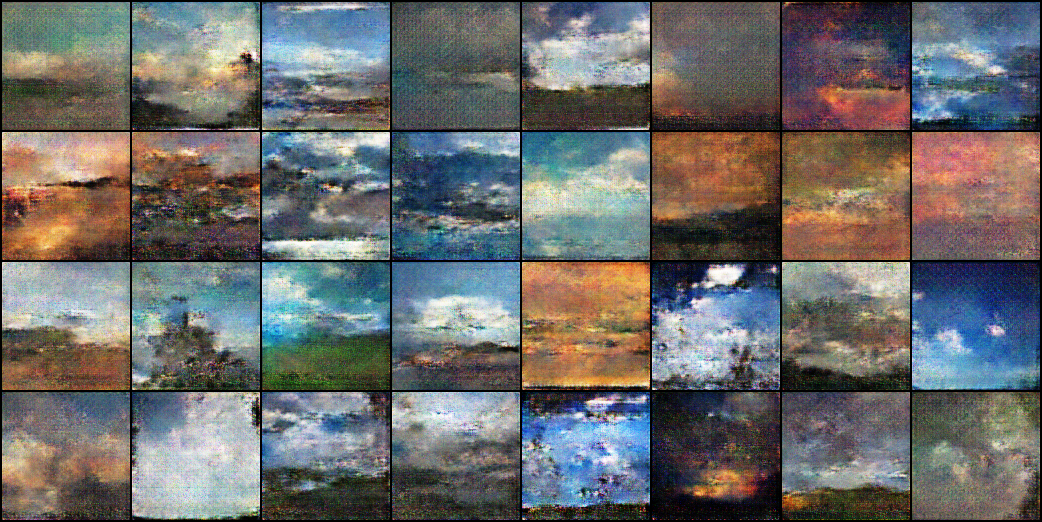

Images generated by randomly sampling the representation space after training the networks for 700 epochs:

Images generated from a fixed random vector in the representation space over the course of training: