Hongjia Zhai · Hai Li · Zhenzhe Li · Xiaokun Pan · Yijia He · Guofeng Zhang

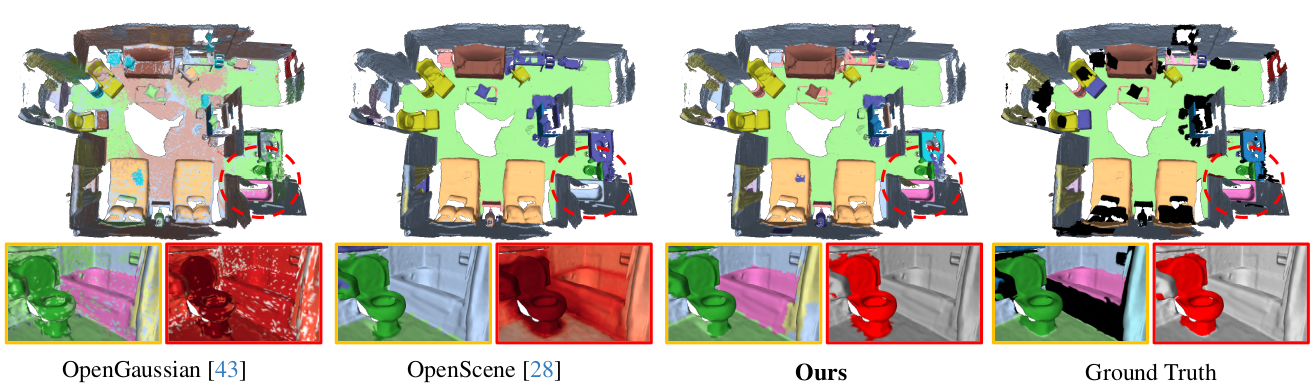

We present PanoGS, a novel and effective approach to 3D panoptic open-vocabulary scene understanding. Technically, to learn accurate 3D language features that scale to large indoor scenes, we adopt a pyramid tri-plane to model the latent continuous parametric feature space and use a 3D feature decoder to regress the multi-view fused 2D feature cloud. In addition, we propose language-guided graph cuts that synergistically leverage the reconstructed geometry and learned language cues to group 3D Gaussian primitives into a set of super-primitives. To obtain 3D-consistent instances, we perform graph-clustering-based segmentation with SAM-guided edge affinity computation between super-primitives.
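To make the pyramid tri-plane idea more concrete, here is a minimal NumPy sketch of a generic multi-resolution tri-plane lookup. It is not the PanoGS implementation and rests on our own assumptions. Points are normalised by the scene bounding box and projected onto the xy, xz and yz planes, and each plane is sampled bilinearly. The three plane features are summed within a level and concatenated across levels. `decode` is a hypothetical linear layer standing in for the 3D feature decoder, and it L2-normalises its output so that it can be compared with 2D language features by cosine similarity. All names, the plane resolutions, the channel count and the 512-dimensional output are illustrative.

```python
import numpy as np

# Which coordinate pair each plane is indexed by (assumed convention).
AXES = {"xy": (0, 1), "xz": (0, 2), "yz": (1, 2)}


def bilinear_sample(plane, uv):
    """Bilinearly sample a (C, R, R) plane at coords uv in [0, 1]^2; returns (N, C)."""
    _, R, _ = plane.shape
    # Map [0, 1] to continuous pixel coordinates [0, R - 1].
    x = np.clip(uv[:, 0], 0.0, 1.0) * (R - 1)
    y = np.clip(uv[:, 1], 0.0, 1.0) * (R - 1)
    x0 = np.floor(x).astype(int)
    y0 = np.floor(y).astype(int)
    x1 = np.minimum(x0 + 1, R - 1)
    y1 = np.minimum(y0 + 1, R - 1)
    wx = (x - x0)[:, None]
    wy = (y - y0)[:, None]
    # Rows are indexed by y and columns by x; transpose (C, N) -> (N, C).
    f00, f01 = plane[:, y0, x0].T, plane[:, y0, x1].T
    f10, f11 = plane[:, y1, x0].T, plane[:, y1, x1].T
    top = f00 * (1 - wx) + f01 * wx
    bottom = f10 * (1 - wx) + f11 * wx
    return top * (1 - wy) + bottom * wy


def make_pyramid_triplane(resolutions, channels, rng):
    """One randomly initialised (xy, xz, yz) plane triple per pyramid level."""
    return [
        {name: rng.normal(scale=0.1, size=(channels, r, r)) for name in AXES}
        for r in resolutions
    ]


def query_triplane(pyramid, points, bbox_min, bbox_max):
    """Return per-point features of shape (N, levels * channels)."""
    p = (points - bbox_min) / (bbox_max - bbox_min)  # normalise into [0, 1]^3
    level_feats = []
    for level in pyramid:
        # Sum the three projected plane features at this level (assumed aggregation).
        f = sum(bilinear_sample(level[name], p[:, list(idx)]) for name, idx in AXES.items())
        level_feats.append(f)
    # Concatenate coarse-to-fine levels into one feature vector per point.
    return np.concatenate(level_feats, axis=1)


def decode(features, weight):
    """Hypothetical linear decoder followed by L2 normalisation."""
    out = features @ weight
    return out / np.linalg.norm(out, axis=1, keepdims=True)


rng = np.random.default_rng(0)
pyramid = make_pyramid_triplane([16, 32, 64], channels=8, rng=rng)
points = rng.uniform(-2.0, 2.0, size=(5, 3))
bbox_min, bbox_max = np.full(3, -2.0), np.full(3, 2.0)
feats = query_triplane(pyramid, points, bbox_min, bbox_max)
print(feats.shape)  # (5, 24)
lang = decode(feats, rng.normal(size=(24, 512)))
print(lang.shape)  # (5, 512)
```

During training, the decoded features would be supervised against the fused 2D feature cloud, for example with a cosine loss. Because the planes are continuous and coarse levels share information across large regions, memory grows with plane resolution rather than with the number of 3D points, which is one reason tri-plane representations are attractive for large scenes.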

The code is currently being reorganized and will be open-sourced within two weeks.

We sincerely thank the following excellent projects, from which our work has greatly benefited.

If you find this code or work useful in your research, please consider citing the following:

@inproceedings{panogs,
  title={{PanoGS}: Gaussian-based Panoptic Segmentation for 3D Open Vocabulary Scene Understanding},
  author={Zhai, Hongjia and Li, Hai and Li, Zhenzhe and Pan, Xiaokun and He, Yijia and Zhang, Guofeng},
  booktitle={IEEE/CVF Conference on Computer Vision and Pattern Recognition},
  year={2025},
}